When using custom data as the source for Retrieval Augmented Generation (RAG) in Generative AI solutions, we can query grounding data in one of two ways:

- Using keyword search. This approach matches documents containing keywords provided in user prompts. This approach is ideal when the use case requires searching for specific information, such as technical information and concepts contained in the augmentation text.

- Using Vector Embeddings. This approach searches the document knowledge base for content that matches the meaning of the user prompt. This approach is ideal when the goal is to match the concepts in source documents--even if the user uses different wording and/or language grammar in their prompt.

In this article I discuss the reason why chunking is needed and selecting a splitting strategy that matches the needs of the source knowledge base. After discussion, I'll show some example code using OpenAI embedding models and LangChain for text splitting middleware.

Why we need to chunk text embeddings

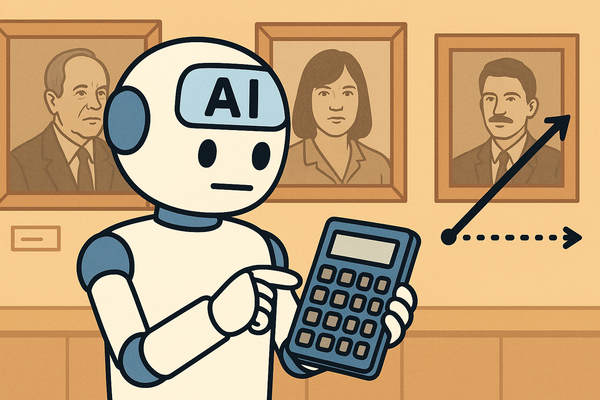

When using our own documents in a RAG strategy with Large Language Models (LLMs), we typically store vector representations of the source documents in a Vector Database.

Vector embeddings provide the ability to search text based on meanings rather than keywords. Typically vectors are stored in a specialized Vector database, such as Pinecone, or in a horizontal search platform that supports vector indexes, such as Azure Cognitive Search.

Vectors in a Vector Database have a predefined size (e.g. 1536 bytes), and each vector should be the same size. What if a source document is too large to be represented by a single vector? The most common solution to split the document into "chunks", create an embedding for each chunk, and store all the chunks into the vector database.

A business document may have dozens or hundreds (or more) vectors, each containing a small part of the original document.

Querying a vector database having chunks

To decide which document fragments to add to the Generative AI prompt, the query follows this general process:

- Create a vector of the user prompt text with the same algorithm that created embeddings of the original documents.

- Search the vector database for document chunks that are close to the meaning of the user prompt. In vector query terms, this means finding chunks that have vectors close to the vector version of the user prompt in a multidimensional space.

- Fetch the original text that created each of the matching vectors. Typically, this text is stored in the vector database as metadata and is readily available with the vector database query response.

- Add the returned text as the context for the prompt sent on to the Large Language Model (LLM) to use when creating (generating) the response.

Text Splitting Strategy

While the concept of the chunking strategy is easy to understand, there are nuances that affect the accuracy of vector query responses, and there are various chunking strategies that can be used.

Naïvely splitting with fixed chunk sizes

The simplest approach to creating text chunks is to split a source document into blocks with an appropriate maximum number of bytes in each chunk. For example, we might use a text splitter that breaks a long document into chunks where each chunk is a fixed number of characters.

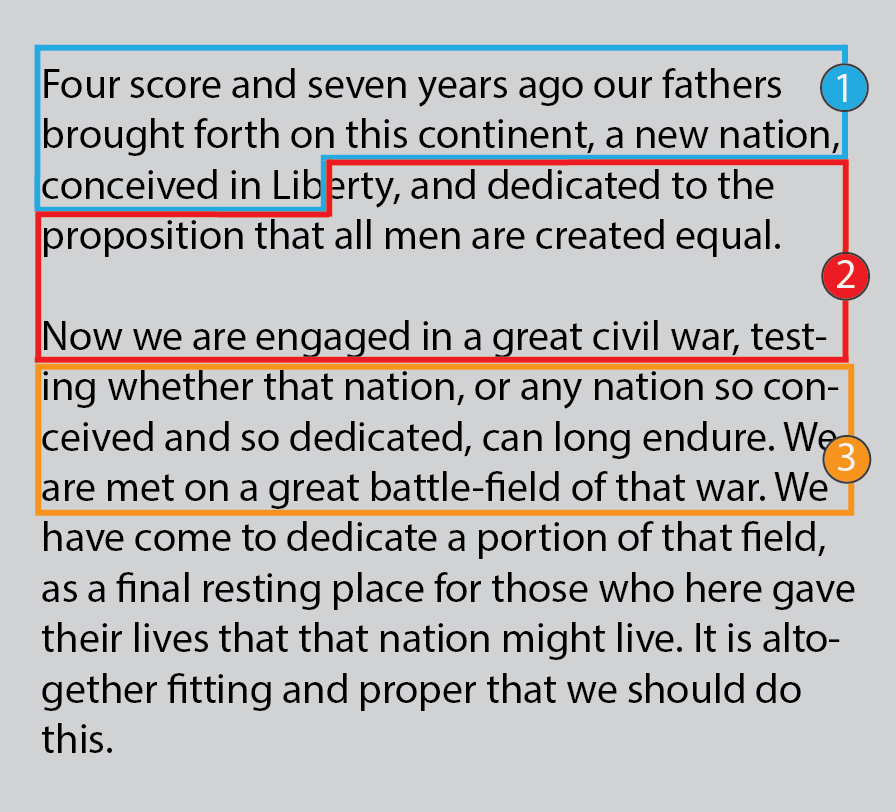

This strategy can be illustrated in the following diagram, where the first three chunks of the Gettysburg Address are outlined in blue, red and orange.

The embedding process would then create a single embedding vector for each chunk. All the chunks would be stored in the vector database, and as users asked about Lincoln's speeches, the vector query would return the original text (and other metadata) for vectors matching the meaning of the user's prompt.

But there's a serious problem with this naïve strategy--do you see it? It's the way text was split into chunk 1 and 2.

Consider the following prompt:

Would the vector search realize the Gettysburg speech does, in fact, include discussion of "Liberty"?

Probably not. Vector 1 includes the word "Lib", and vector 2 includes the word "erty". Neither of these are likely to be considered close to the concept "Liberty" in the original prompt.

Context Overlap

Clearly, we need a better strategy. There are several approaches, but one that's easy to understand is using context overlap.

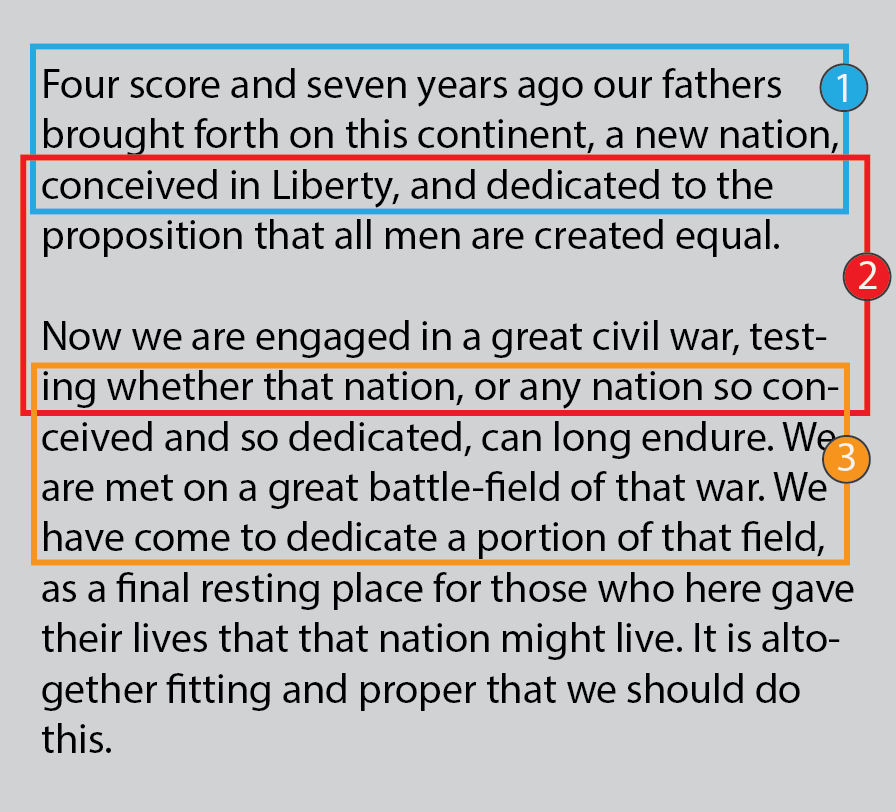

In this approach, rather than splitting chunks at exactly the fixed number of characters, we could instead use chunks that overlap from one to the next. In this way, a chunk still may begin or end with a partial word, but the chunk adjacent to it will contain the entire word.

For example, in the following document chunked with overlap, the word "testing" has been split in chunk 3 (to "ing"), but it is fully contained in chunk number 2. In a search for "testing" vector number 3 wouldn't be returned, but vector 2 would be returned.

While this is a simple example, it illustrates the idea that we need to devise chunking strategies carefully.

Implementing Text Splitting with LangChain

The following code illustrates the code to implement chunking a PDF file using LangChain.

Add Dependencies

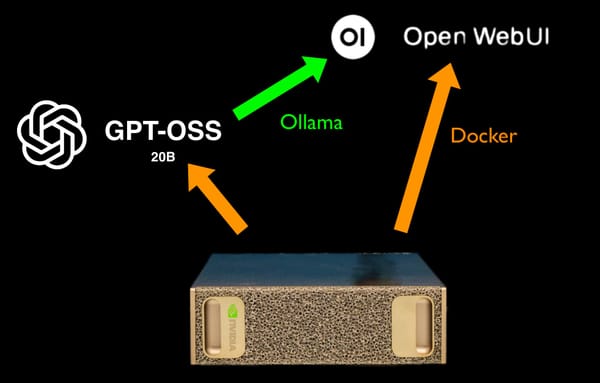

First we need to include LangChain dependencies to gain access to a text splitter that can read PDF files and implements a splitter with overlap. Since I'll use the OpenAI text-embedding-ada-002 LLM to create embeddings, some OpenAI imports are added a well.

from langchain.embeddings.openai import OpenAIEmbeddings

from langchain.text_splitter import RecursiveCharacterTextSplitter

from langchain.document_loaders import PyPDFLoader

import openaiCreate an OpenAI Embedding Object

Next we'll need an object to create embeddings given chunks of text. I'll use LangChain's OpenAIEmbeddings module, passing in my OpenAI Key for authentication.

embed = OpenAIEmbeddings(

model = 'text-embedding-ada-002',

openai_api_key= OPENAI_KEY)Load the PDF Document

# Load a single document using LangChain's PDF Loader

loader = PyPDFLoader(file)

doc = loader.load()Split the Document into Chunks

Next, we can use LangChain's recursive text splitter to create chunks of text that overlap by 150 characters.

# split documents into text and embeddings

text_splitter = RecursiveCharacterTextSplitter(

chunk_size=1000,

chunk_overlap=150

)

chunks = text_splitter.split_documents(document)The variable chunks will be a list of text chunks that are no more than 1000 characters, and each chunk will include 150 characters of the chunks before and after it.

Create Embedding Vectors

Next we extract a list of text from the chunks, and submit this list to OpenAI for embedding:

# Create an array of text to embed

context_array = []

for i, row in enumerate(chunks):

context_array.append(row.page_content)

# Submit array to OpenAI, which will return a list of embeddings

# calculated from the input array of text chunks

emb_vectors = embed.embed_documents(context_array) At this point, we have an array of the original text chunks, and a corresponding array of embeddings. We would finish indexing the documents by submitting the text and corresponding embeddings (vectors) to our vector database.

Summary

Storing grounding data for Large Language Model search using Retrieval Augmented Generation is a common pattern and is one of the most economical approaches to leveraging LLMs in delivering conversational user interfaces.

When it's important for user prompts to be augmented with document data according to the underlying meaning of the user's prompt, storing grounding data in a vector database is a common approach.

A common middleware for implementing text extraction and vector embedding is LangChain, which hides many of the implementation details in an abstract SDK.