This post uses Azure AI Custom Vision to create models that are then deployed as Apple CoreML models on iOS devices.

While it's not a prerequisite to have read the post where the object detection model used in this example was designed, if you'd like to review it first, follow this link.

Azure AI Custom Vision is a cloud-first service that provides hosted training and prediction for image classification and object detection models. While cloud deployment makes sense in many scenarios, in others deploying trained models on edge devices is the better solution.

Examples of edge model deployment include:

- An IoT camera that should send images to a centralized system only when specific objects are identified within a video feed frame.

- An iOS or Android application that classifies images or performs object detection without sending user-owned images to a cloud-based system.

- An AI Vision application that processes proprietary or sensitive data that isn't permitted to leave an internal network.

Edge Deployment Limitations

Exporting models for edge deployment supports a variety of use cases, but keeping model prediction in the cloud is sometimes the better decision. For example:

- Not all models can be exported. Only Exportable Compact Domain models can be exported and deployed on edge devices. If the perfect Vision model isn't exportable, a cloud deployment may make the most sense.

- If the Vision model will be frequently retrained, it may make sense to host it in the cloud to make the ongoing train-deploy cycles simpler to manage.

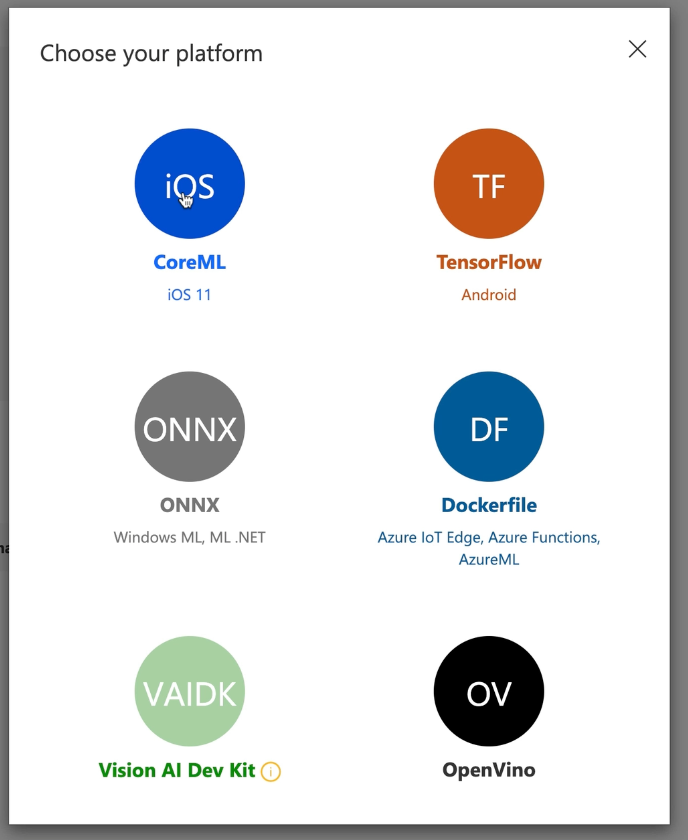

Which Export Formats Are Supported

Azure AI Custom Vision models can be exported in a variety of formats. The following model formats are supported, listed with their typical deployment targets.

- CoreML - Apple devices

- TensorFlow - Android devices and JavaScript apps (e.g. React, Angular, Vue)

- ONNX - Open Source format for Windows ML, iOS and Android

- Dockerfile - Windows or Linux containers

- Vision AI Dev Kit - Smart Cameras

- OpenVINO - Linux, Windows, macOS, Raspbian OS

Video Demonstration

The demo portion of this post is available in video format! The written post continues after the embedded YouTube video.

Exporting the Model From Azure AI Custom Vision

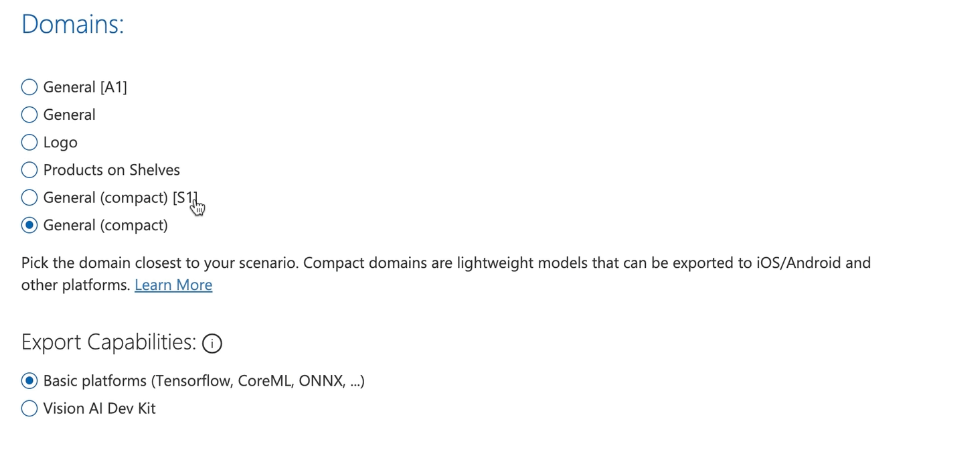

The first step in deploying an AI model to an edge device is to ensure the selected model is a Compact model.

Verify the project uses a compact model

If the project's model isn't compact, it can be changed by selecting a compact model, saving the project, and then retraining.
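
The same check can also be scripted, for instance as a gate at the start of a build pipeline. The sketch below assumes a training client shaped like the Custom Vision Python SDK's `CustomVisionTrainingClient`, relying on its `get_project` and `get_domain` calls and on the `exportable` flag of a domain. Treat those names as assumptions to verify against the SDK version in use; `project_id` is a placeholder.

```python
def project_is_exportable(trainer, project_id):
    """Return True if the project's current domain can be exported.

    `trainer` is assumed to behave like CustomVisionTrainingClient:
    get_project() returns an object exposing settings.domain_id, and
    get_domain() returns an object with a boolean `exportable` flag.
    """
    project = trainer.get_project(project_id)
    domain = trainer.get_domain(project.settings.domain_id)
    return bool(domain.exportable)
```

A pipeline could stop early when this returns `False`, since a project in that state has to be switched to a compact model and retrained before it can be exported.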

Export the model

When the compact model is trained and validated, the next step is to export the model. This can be done in the Custom Vision portal, or initiated programmatically; for example, a model can be retrained, exported, and extracted as part of a CI/CD process.

Exporting the model creates an export .zip file with assets appropriate for the selected model and iteration.
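
For the programmatic route, an export is typically requested and then polled until it finishes. The following is a minimal sketch rather than a definitive implementation. It assumes a client shaped like the Custom Vision Python SDK's `CustomVisionTrainingClient`, with `export_iteration` to queue the export and `get_exports` returning entries that carry `platform`, `status`, and `download_uri`. The status strings `"Done"` and `"Failed"`, the polling interval, and the timeout are assumptions as well.

```python
import time


def export_and_wait(trainer, project_id, iteration_id, platform="CoreML",
                    poll_seconds=5.0, timeout_seconds=600.0, sleep=time.sleep):
    """Start an export and poll until a download URI is available.

    `trainer` is assumed to behave like CustomVisionTrainingClient:
    export_iteration() queues the export, and get_exports() lists exports
    with `platform`, `status` and `download_uri` attributes.
    """
    trainer.export_iteration(project_id, iteration_id, platform)
    waited = 0.0
    while True:
        for export in trainer.get_exports(project_id, iteration_id):
            if export.platform != platform:
                continue  # ignore exports made for other targets
            if export.status == "Done":
                return export.download_uri
            if export.status == "Failed":
                raise RuntimeError(f"{platform} export failed")
        if waited >= timeout_seconds:
            raise TimeoutError(f"{platform} export not ready after {waited:.0f}s")
        sleep(poll_seconds)
        waited += poll_seconds
```

Passing `sleep` as a parameter keeps the function easy to exercise in tests without real delays.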

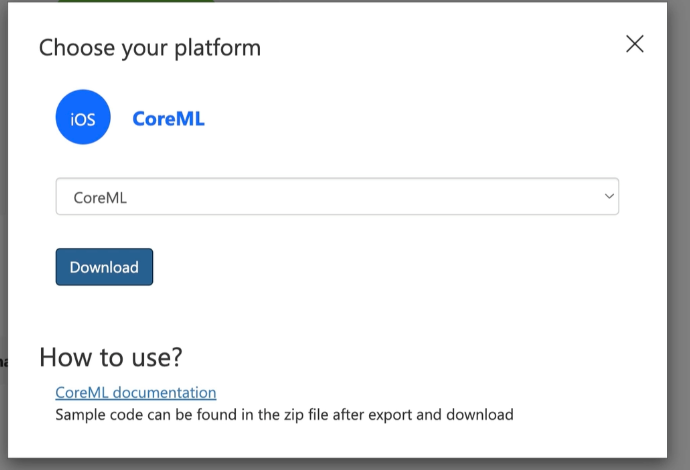

Download the export

Downloading the export retrieves the .zip file created during the export operation.
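
In an automated pipeline, the download and extraction steps might look like the sketch below. It uses only the Python standard library and makes no assumption about the archive's exact layout: it simply searches the extracted files for anything ending in `.mlmodel` and for a file named `cvexport.manifest`. The function names and paths are illustrative.

```python
import shutil
import urllib.request
import zipfile
from pathlib import Path


def download_export(download_uri, zip_path):
    """Stream the export archive from its download URI to a local file."""
    zip_path = Path(zip_path)
    with urllib.request.urlopen(download_uri) as response, open(zip_path, "wb") as out:
        shutil.copyfileobj(response, out)
    return zip_path


def unpack_export(zip_path, dest_dir):
    """Extract the archive and return (model_files, manifest_files)."""
    dest_dir = Path(dest_dir)
    with zipfile.ZipFile(zip_path) as archive:
        archive.extractall(dest_dir)
    models = sorted(dest_dir.rglob("*.mlmodel"))
    manifests = sorted(dest_dir.rglob("cvexport.manifest"))
    if not models:
        raise FileNotFoundError("no .mlmodel file found in the export")
    return models, manifests
```

Failing loudly when no `.mlmodel` file is present helps catch the case where a different export format was downloaded by mistake.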

Integrating the Model into an Xcode Project

While this post provides an iOS example for model deployment, the process will be substantially similar on other platforms.

Once the model .zip file is exported, it can be expanded on a development machine or in a CI/CD pipeline and incorporated into the build of the iOS application, so that the model ships bundled with the app. In this example, Xcode is used to build a native iOS application using Swift.

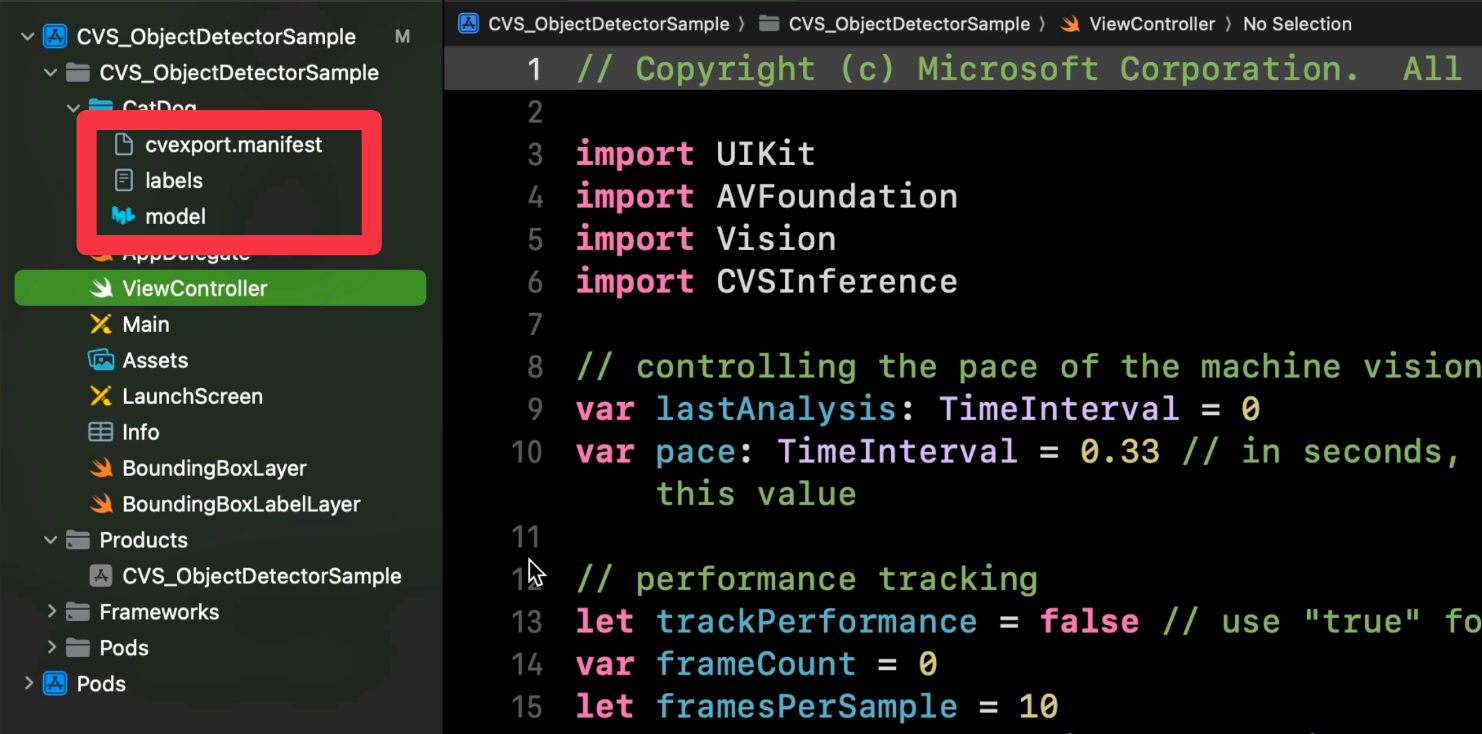

Import the model into the Xcode project

CoreML models have an .mlmodel extension, and are included in the Xcode project along with a manifest and other configuration files.

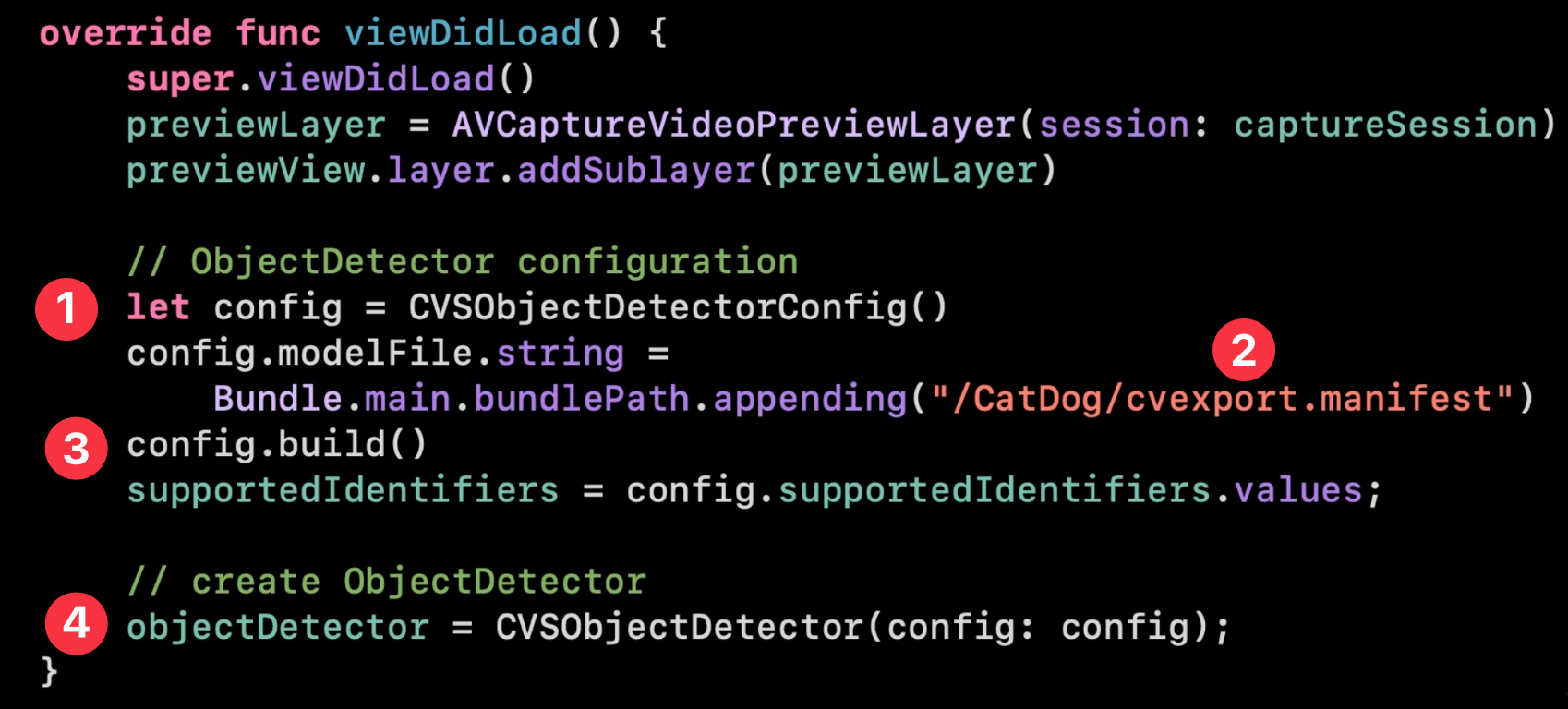

Create a prediction model in code

Once the CoreML model is imported to the Xcode project, we then create a model object that can be used in the application to execute predictions directly on the iOS device.

- The `CVSObjectDetectorConfig` object is provided by Microsoft, and is used to configure a prediction model callable at runtime. The `cvexport.manifest` file provided as part of the model download is used to specify the model parameters and source files.

- The `build()` method reads the configuration parameters into a configuration object.

- The code then uses the configuration object to create an instance of `CVSObjectDetector`, which is an instance of the model that can be used for object detection by the iOS application.

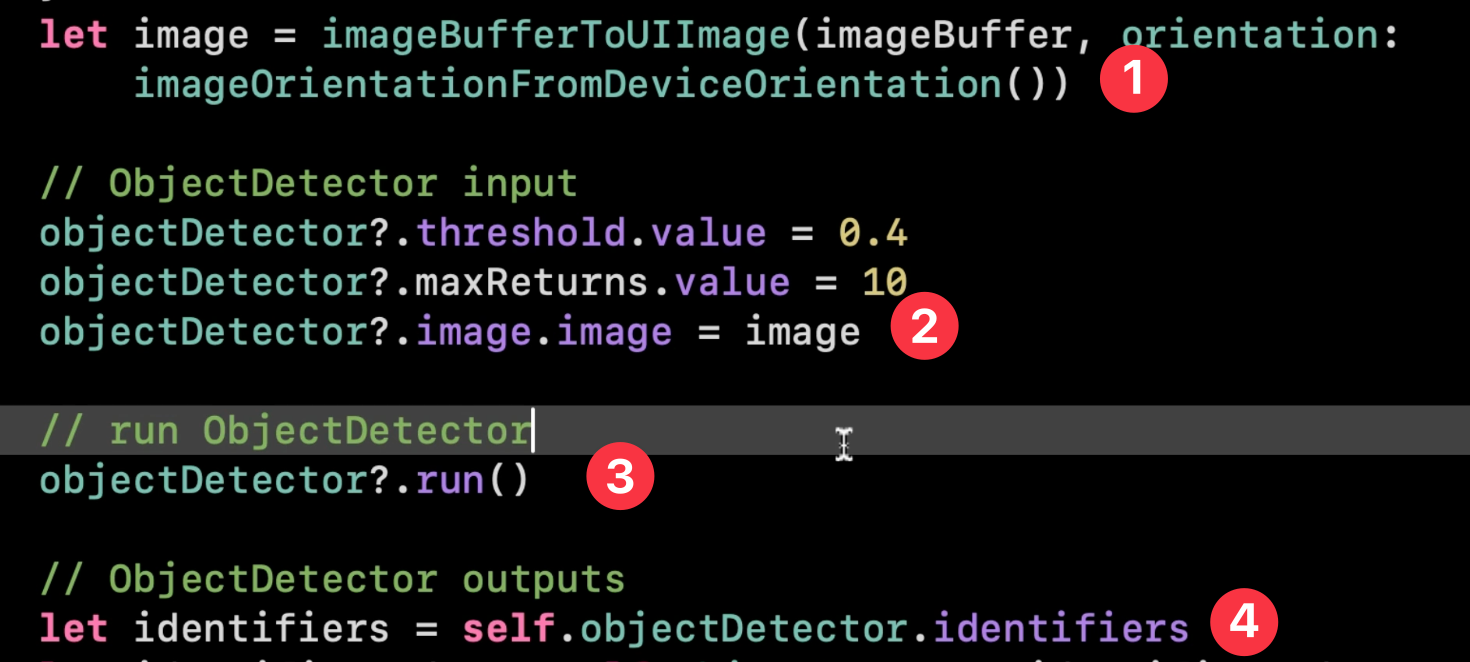

Call the object detection model

Since the Vision model is used for object detection, at runtime we call it using an image as an input, and read the list of objects found by the model.

- The test application reads frames from a video feed, and for each received frame calls the prediction model.

- The image is injected into the `objectDetector` instance via the `image` property.

- The app calls the `run` method on the `objectDetector`.

- If any objects (i.e. eggs) are detected in the video frame, the label corresponding to each object found and the bounds within the image where it was found are returned.
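
To draw results over a frame, the returned bounds usually need to be mapped to pixel coordinates. The sketch below assumes each bound arrives as a normalized `(left, top, width, height)` tuple with values between 0 and 1 and a top-left origin, which is how the Custom Vision prediction API reports bounding boxes. Whether the on-device detector follows the same convention is an assumption to check. The `Detection` type and the function name are hypothetical; the arithmetic is shown in Python for brevity and carries over directly to Swift.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    """Hypothetical container for one detected object."""
    label: str
    box: tuple  # normalized (left, top, width, height), each in 0..1


def to_pixel_rect(detection, frame_width, frame_height):
    """Map a normalized box to integer pixel (x, y, width, height),
    clamped so the rectangle stays inside the frame."""
    left, top, width, height = detection.box
    x0 = min(max(left, 0.0), 1.0)
    y0 = min(max(top, 0.0), 1.0)
    x1 = min(max(left + width, 0.0), 1.0)
    y1 = min(max(top + height, 0.0), 1.0)
    x, y = round(x0 * frame_width), round(y0 * frame_height)
    return (x, y, round(x1 * frame_width) - x, round(y1 * frame_height) - y)
```

For example, `to_pixel_rect(Detection("egg", (0.25, 0.5, 0.5, 0.25)), 640, 480)` returns `(160, 240, 320, 120)`.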

Video frames can arrive at a fast pace, i.e., many frames per second. Because the model is deployed to the iOS device, object detection can run as video frames are extracted to images, resulting in a real-time response for object identification.

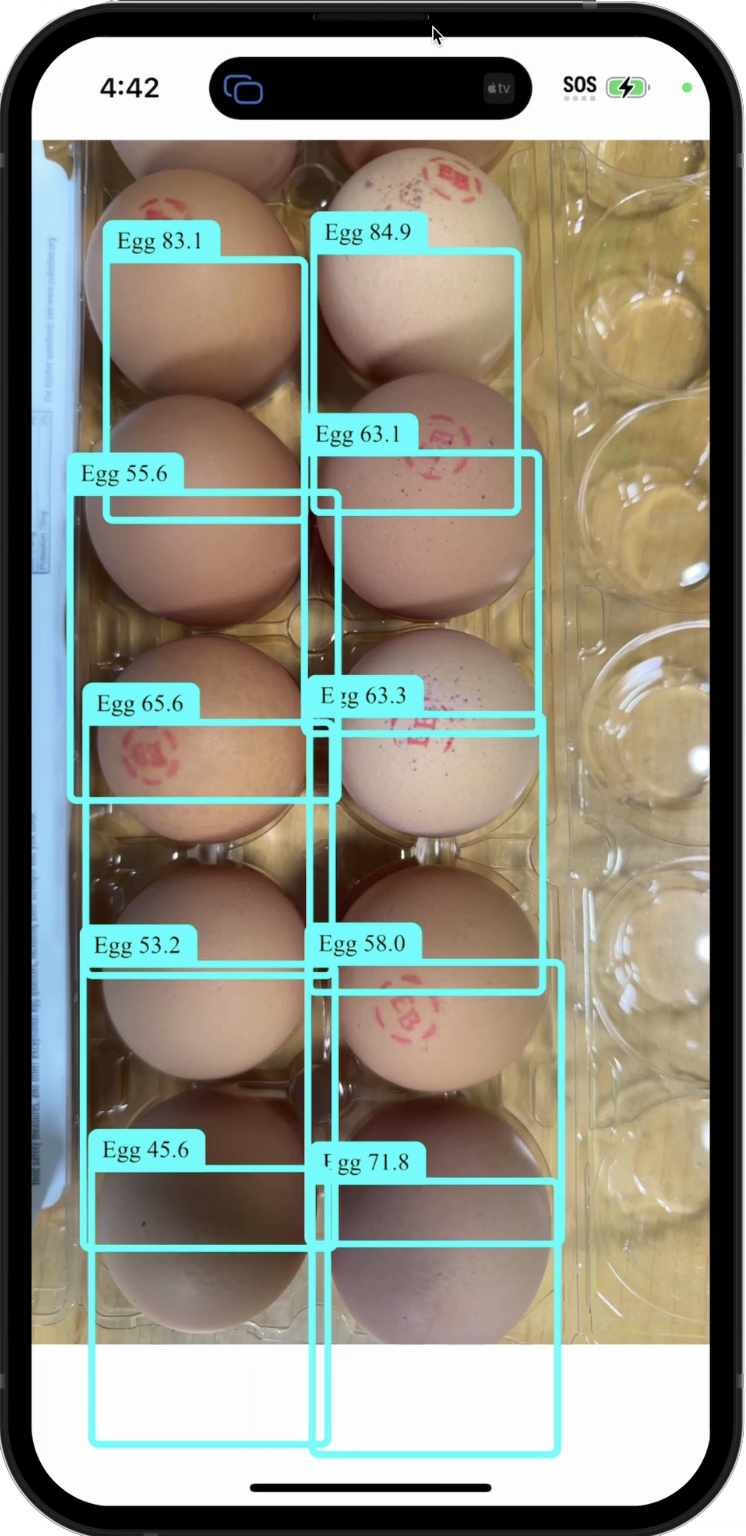

As eggs are discovered, the application responds by drawing labels and bounding boxes around object locations (see below).

Running the App on an iPhone

When the application runs on the iPhone, the user can see in real time where objects (eggs) are observed in each frame of the video feed.

Summary

Azure AI Custom Vision provides a rich feature set for models deployed in the public cloud. While Custom Vision models provide excellent performance and availability when cloud deployed, Custom Vision also supports exporting models for deployment on edge devices.

While not all models supported by Azure AI can be exported (only the Compact models can be), exportable models support many important edge AI use cases, such as:

- Devices with poor or no Internet connectivity

- Scenarios where sensitive data should be processed within private networks

- Real-time classification/object detection scenarios where Internet latency wouldn't provide acceptable detection/classification response time

Many types of edge devices are supported, running a variety of operating systems and hardware profiles. Supported platforms include mobile devices (iOS, Android), IoT devices such as web cameras, and even Docker containers deployed in on-premises data centers.