Introduction

When thinking about analyzing data, most of us think about tables containing numbers, categories, and dates. Slicing & dicing business data to gain understanding and insight is a key imperative for most enterprises. Yet in a world filled with cameras and video feeds, multimedia assets have become a valuable source of data as well.

But how can we efficiently analyze large volumes of image content? Historically, image analysis was a highly labor-intensive process involving human review and tagging of images according to presence and significance of image content.

Now that mature AI technologies are widely available, businesses can employ AI models to help analyze multimedia content and draw new insights from assets such as images and video feeds.

Watch on YouTube

The remainder of this post is also available in video format, or you can continue reading after the video link.

Azure AI Vision Overview

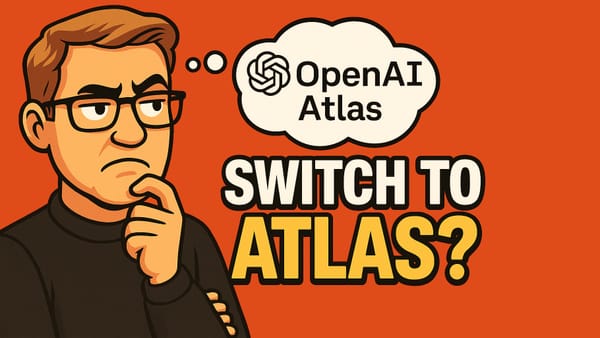

One technology available to enrich image data with descriptive metadata is the Azure AI Vision service, one of many services in the Azure AI family. AI Vision analyzes image data and returns text information that describes the image content.

Azure AI Vision provides four distinct service categories:

In this post I'll detail a solution that uses Image Analysis to extract tags, categories, landmarks and descriptive text from a library of images. I'll also use Image Analysis to generate thumbnail images that place the main image subject in a prominent position and extract a list of objects found within each image.

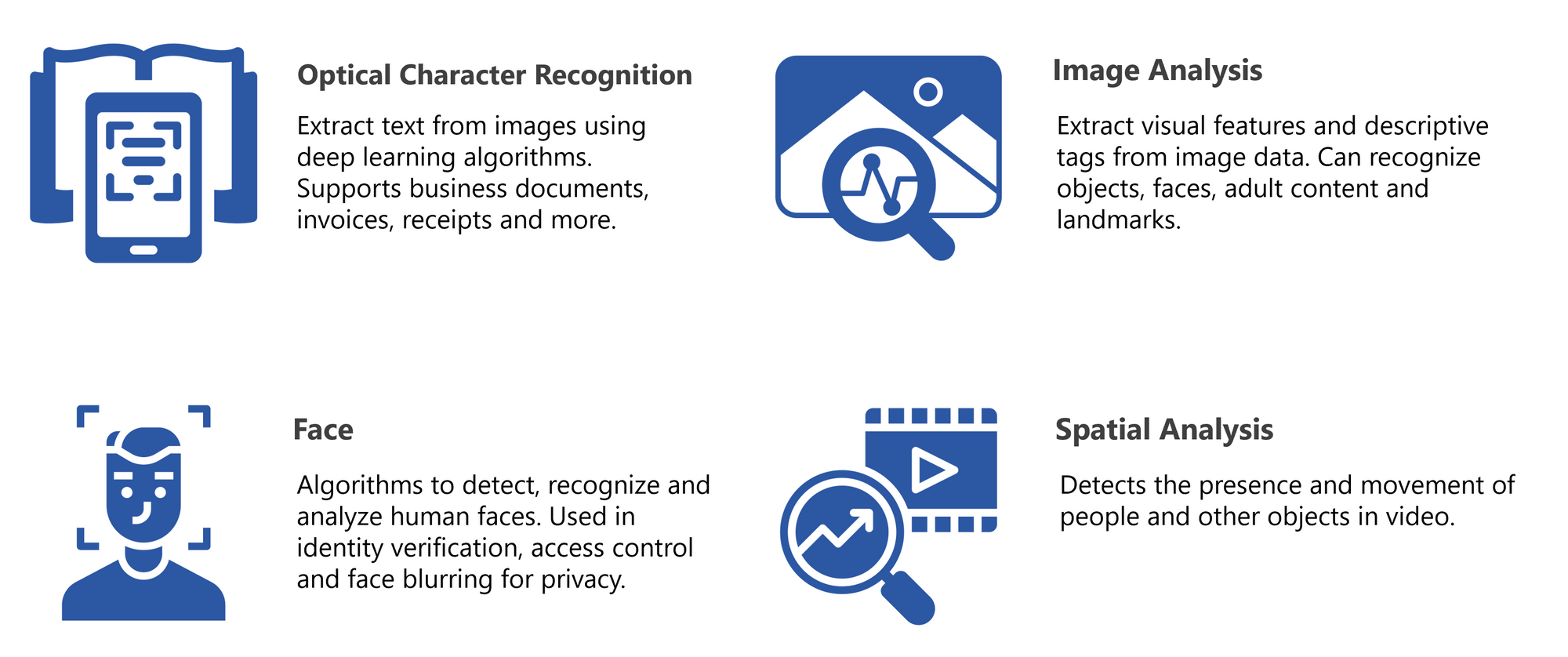

Solution Architecture

This proof-of-concept solution is built as a C# command-line application that reads a local library of images and processes each image as follows:

- EXIF data fields are extracted from the image (e.g. geo latitude/longitude, camera and lens used, exposure, etc.).

- The image is then submitted to Azure AI Vision for AI processing.

- The high-confidence model outputs from Azure AI are extracted from the response.

- The image is submitted again to AI Vision to generate a context-relevant thumbnail image.

- The combined image EXIF and AI metadata are added to an Azure SQL Database.

- The original and thumbnail images are uploaded to Blob Storage in an Azure Storage Account for use in the Power BI solution.
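The third step above, keeping only the high-confidence model outputs, can be sketched in a few lines of C#. This is a minimal illustration rather than the solution's actual code: `ScoredLabel` and `ConfidenceFilter` are hypothetical names standing in for the SDK's response types, and the 0.5 default mirrors the 50% cut-off applied to tags later in this post.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical stand-in for one labelled model output (a tag, object, or category).
public sealed class ScoredLabel
{
    public string Name { get; }
    public double Confidence { get; }

    public ScoredLabel(string name, double confidence)
    {
        Name = name;
        Confidence = confidence;
    }
}

public static class ConfidenceFilter
{
    // Returns the names of labels whose confidence is strictly above the
    // threshold, ordered from most to least confident.
    public static List<string> HighConfidenceNames(
        IEnumerable<ScoredLabel> labels, double threshold = 0.5)
    {
        return labels
            .Where(label => label.Confidence > threshold)
            .OrderByDescending(label => label.Confidence)
            .Select(label => label.Name)
            .ToList();
    }
}
```

Applying one helper like this to tags, objects and categories keeps the cut-off in a single place, which makes it easier to tune if the results turn out too noisy or too sparse.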

Submitting Images to Azure AI Vision

Azure AI Vision can be called through SDKs for several languages, or directly through its REST API:

- C#

- Go

- Java

- JavaScript

- Python

- REST

In this example I've used C#, but the same workflow could be implemented using other languages.

Create A Vision AI Client

The first step in using Vision AI to analyze our image is to create a client used to communicate with the service. Essentially this is a higher-level language wrapper around the REST API.

    // AI Vision is authorized via a service endpoint and pre-shared key
    string cogSvcEndpoint = configuration["CognitiveServicesEndpoint"];
    string cogSvcKey = configuration["CognitiveServiceKey"];

    // Wrap the pre-shared key in a credentials object for the client
    ApiKeyServiceClientCredentials credentials = new ApiKeyServiceClientCredentials(cogSvcKey);

    // The client object used to connect to AI Vision
    ComputerVisionClient cvClient = new ComputerVisionClient(credentials)
    {
        Endpoint = cogSvcEndpoint
    };

List AI analysis types

The next step is to create a list of the analysis types (visual features) we'd like the AI Vision service to perform on the image we're about to upload.

    // A list of features we'd like AI Vision to process when we submit the image
    List<VisualFeatureTypes?> features = new List<VisualFeatureTypes?>()
    {
        VisualFeatureTypes.Description,
        VisualFeatureTypes.Tags,
        VisualFeatureTypes.Categories,
        VisualFeatureTypes.Objects,
    };

In this solution I'm interested in a one-sentence caption (Description), along with the tags, categories, and objects that Vision AI detects within the image.

AI Vision provides a broad set of image analysis features, including:

- Read text (OCR)

- Detect people

- Generate image captions

- Detect objects

- Tag visual features

- Smart crop

- Detect brands

- Categorize images

- Detect faces

- Detect image type (drawing, clip art, etc.)

- Detect landmarks

- Detect celebrities

- Detect color schemes

- Content moderation

Submit the Image for Analysis

Having an authorized connection to Azure AI and a list of desired features to use, we submit an image to the cloud service for analysis and await the result.

    // Load the image from the local file system
    var imageData = File.OpenRead(imageFilePath);

    // Submit the image to AI Vision, and wait for its response
    var analysis = await cvClient.AnalyzeImageInStreamAsync(imageData, features);

Read the AI Analysis Results

When the analysis is complete, Azure AI returns an analysis object, which has many embedded objects to expose each analysis output we requested.

We can read any or all of the responses. Each one can be read by iterating over the items found. Here's an example of iterating over each tag found in the image:

    // Import any tag where Vision AI had a confidence of > 50%
    if (analysis.Tags.Count > 0)
    {
        Console.WriteLine("Tags:");
        foreach (var tag in analysis.Tags)
        {
            if (tag.Confidence > 0.5)
            {
                imageProps.tags.Add(tag.Name);
            }
        }
    }

Create a Smart Crop Thumbnail

As part of the Power BI solution, we want to create a square thumbnail to display in a Power BI Table.

The primary subject of an image may not be exactly in the center of the image (and for a well-composed photo will rarely be!). AI Vision can help create good thumbnails by analyzing the image, identifying its likely subject, and creating a square thumbnail around that subject.
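To make the idea concrete, here is a rough geometric sketch of cropping a square around a subject. It is only an illustration and makes no claim about how AI Vision chooses its crop: the `SquareCrop` class is hypothetical, and it assumes the subject's bounding box is already known.

```csharp
using System;

public static class SquareCrop
{
    // Returns the top-left corner and side length of the largest square that
    // fits inside the image, centered on the subject's bounding box where
    // possible and shifted back inside the image bounds where necessary.
    public static (int X, int Y, int Side) AroundSubject(
        int imageWidth, int imageHeight,
        int subjectX, int subjectY, int subjectWidth, int subjectHeight)
    {
        int side = Math.Min(imageWidth, imageHeight);

        // Center point of the subject's bounding box
        int centerX = subjectX + subjectWidth / 2;
        int centerY = subjectY + subjectHeight / 2;

        // Center the square on the subject, then clamp it to the image
        int x = Math.Max(0, Math.Min(centerX - side / 2, imageWidth - side));
        int y = Math.Max(0, Math.Min(centerY - side / 2, imageHeight - side));

        return (x, y, side);
    }
}
```

For a 4000 x 3000 photo with a 600 x 800 subject box whose top-left corner is at (3000, 1000), a plain center crop would start at x = 500, while this sketch moves the square to x = 1000 so it stays aligned with the right-hand edge where the subject sits.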

    // Rewind the image stream in case the earlier analysis call left it at the end
    imageData.Seek(0, SeekOrigin.Begin);

    // Submit the image to AI Vision, asking for a smart cropped thumbnail
    var thumbnailStream = await cvClient.GenerateThumbnailInStreamAsync(100, 100, imageData, true);

    // Upload the new thumbnail to Azure Blob Storage for use by Power BI
    filename = imageProps.id + "_thumbnail.jpg";
    blobClient = blobContainerClient.GetBlobClient(filename);
    await blobClient.UploadAsync(thumbnailStream, new BlobHttpHeaders {
        ContentType = "image/jpeg"
    });

Add Image to Azure SQL Database

In the example code above, an imageProps object is used to hold all the metadata extracted from EXIF and created by Azure AI Vision.
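The post doesn't show the definition of imageProps, so the following is only a guess at its shape. The `id` and `tags` members appear in the code above; every other member, including the `ThumbnailBlobName` helper and the choice of a string `id`, is a hypothetical addition for illustration.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical container for one image's combined EXIF and AI metadata.
public sealed class ImageProps
{
    // Members used by the post's code (the string type is an assumption)
    public string id { get; set; } = "";
    public List<string> tags { get; } = new List<string>();

    // Assumed EXIF fields
    public string cameraModel { get; set; } = "";
    public double? latitude { get; set; }
    public double? longitude { get; set; }

    // Assumed AI Vision outputs
    public string caption { get; set; } = "";
    public List<string> categories { get; } = new List<string>();
    public List<string> objects { get; } = new List<string>();
    public List<string> landmarks { get; } = new List<string>();

    // Builds the thumbnail blob name the same way the upload code does
    public string ThumbnailBlobName()
    {
        return id + "_thumbnail.jpg";
    }
}
```

Keeping the EXIF and AI fields on one object means a single call can hand everything about an image to the database layer.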

This post won't go into detail about how to insert this data into a SQL Database, but for the sake of completing the code examples, the example C# program calls a method to save the metadata stored in imageProps to the SQL Database:

    bool insertSuccess = database.AddImage(imageProps);

Power BI Solution

With the images uploaded and metadata in the cloud database, the data and images are available for analysis in a variety of analytical tools. In this case we'll use Microsoft Power BI.

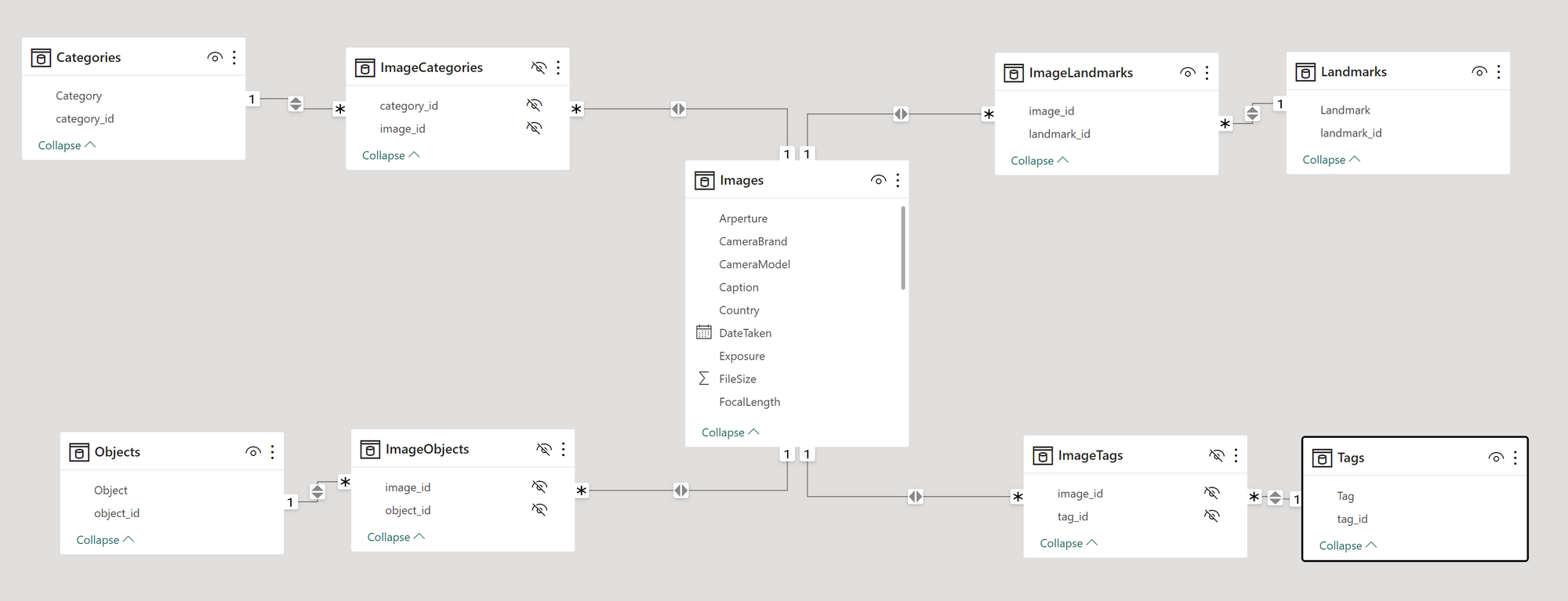

Power BI Data Model

The Power BI Data Model is built directly on the Azure SQL Database, incorporating the Images Table as the primary fact table, and Categories, Landmarks, Objects and Tags as AI-populated dimension tables. Each of the AI dimensions is related to Images in a many-to-many relationship (e.g. an image can have many tags, and a single tag can be related to many images).
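One way to feed a many-to-many relationship like this is a bridge table with one row per image and tag pair. The sketch below shows how such rows could be produced in C# before they are written to the database. The `BridgeRows` class, its method names and the in-memory dictionary are assumptions made for illustration; the post does not show how its tables are actually populated.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

public static class BridgeRows
{
    // Flattens "image -> tags" into one (ImageId, Tag) row per pair,
    // ignoring case-insensitive duplicate tags within the same image.
    public static List<(string ImageId, string Tag)> ImageTagPairs(
        IReadOnlyDictionary<string, List<string>> tagsByImage)
    {
        var rows = new List<(string ImageId, string Tag)>();
        foreach (var entry in tagsByImage)
        {
            foreach (var tag in entry.Value.Distinct(StringComparer.OrdinalIgnoreCase))
            {
                rows.Add((entry.Key, tag));
            }
        }
        return rows;
    }

    // Follows the relationship in the other direction: every image that
    // carries the given tag (compared without regard to case).
    public static List<string> ImagesWithTag(
        IEnumerable<(string ImageId, string Tag)> rows, string tag)
    {
        return rows
            .Where(row => string.Equals(row.Tag, tag, StringComparison.OrdinalIgnoreCase))
            .Select(row => row.ImageId)
            .Distinct()
            .ToList();
    }
}
```

The same pattern would apply to categories, landmarks and objects, each with its own bridge rows keyed on the image.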

Power BI Visualizations

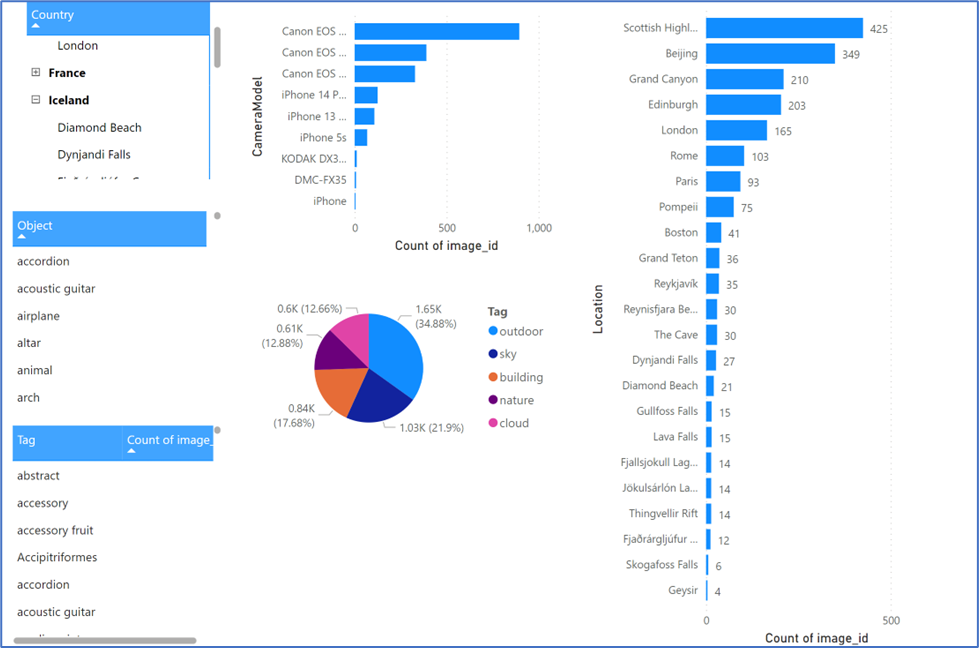

With the AI-enriched data loaded into the data model, we can use Power BI's visualization tools to analyze the photo library quantitatively, drilling across both AI-generated and non-AI-generated metadata.

Quantitative Analysis

Analysts can slice, dice, and quantitatively analyze descriptive image content extracted via AI models. This allows users to focus only on those images they need to review—rather than poring through an entire image library.

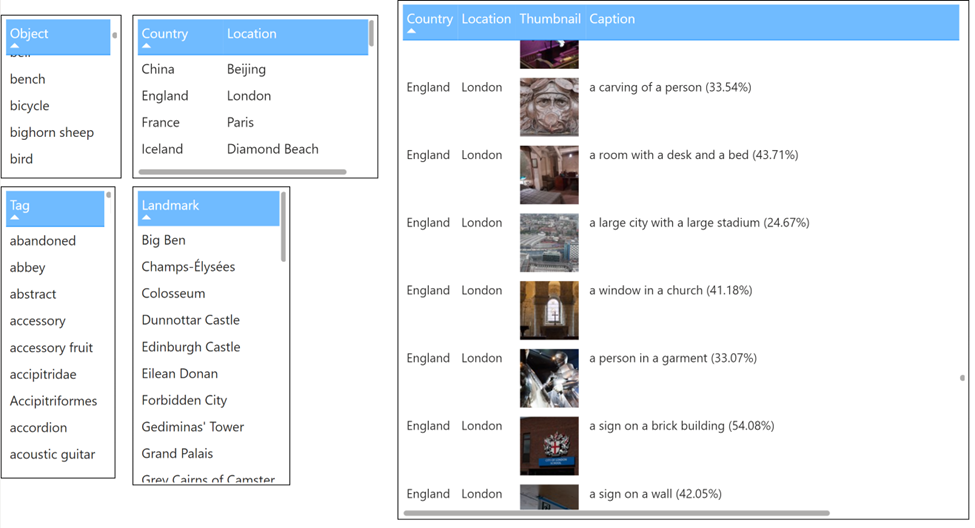

Image Search

The AI-generated metadata also provides new ways to search for content within the images.

During the AI enrichment phase, Azure AI Vision describes the contents of each image using categories, detected objects and tags.

In the image below, we can search for all images where Vision AI detected a waterfall as part of the image content.

Where to go from here

This post is an example of how to use Azure AI Vision's Image Analysis with pre-trained models to analyze images. We were able to extract tags, categories and landmarks, and to detect objects covered by the pre-trained models, with relatively little effort and minimal knowledge of how to build data science models for image processing.

If your solution is business or domain specific and needs models trained on custom images, Microsoft provides a companion service, Azure AI Custom Vision. It lets developers define and train custom models for domains that the pre-trained Vision models may not cover. Of course, it's entirely possible to combine pre-trained and custom models when that makes sense.