Microsoft Fabric supports Jupyter notebooks for interactive data engineering, data science, and data analytics. When analyzing or processing data, we often use external services that require secrets such as API keys or username/password pairs for access.

Storing secrets within notebooks is not good practice, as they can be leaked to unauthorized users. The best way to store secrets is Azure Key Vault, where access to secrets can be tightly controlled through Role-Based Access Control (RBAC).

Azure Key Vault

Azure Key Vault (I'll refer to it as AKV from here on) is a core Azure infrastructure service that stores secrets in an encrypted key/value store and provides programmatic access to those secrets only to authorized users.

Why We're Using Azure Key Vault

In this post, I'm going to create a key vault, store an Azure AI Services access key in the vault, then fetch the key within a Fabric Jupyter notebook where I want to leverage AI Services directly to process a Spark dataframe.

Since I'll store my Jupyter notebook in Fabric, where any other workspace user can see it (and later in GitHub, where anyone with access to my repo could also see it), I want to use AKV: I don't want the raw secret in the notebook!

A secondary reason to use AKV is that when the key is rotated (changed), only the value stored in AKV needs updating, and the notebook that uses the key continues to work normally.

Enough talk – let's create a new vault and store a key inside!

Create the Key Vault

A new AKV can be created from the Azure portal, but in this example I'll use the Azure CLI.

First, I'll create the new vault. Note that an AKV name must be globally unique across all customers (much like a storage account name). This name becomes the first segment of the AKV URL.

```bash
az keyvault create --name <unique-keyvault-name> \
  --resource-group <group name> \
  --location <region>
```

Next, I'll add my Azure AI access key to the vault.

```bash
az keyvault secret set --vault-name <unique-keyvault-name> \
  --name AZURE-AI-SERVICES-KEY \
  --value "XXXXXXXXXXXXXXXXXXXXXXXXXXXX"
```

That's it for the AKV setup. From now on, I can access the value of AZURE-AI-SERVICES-KEY from code, such as Python code in a Jupyter notebook.
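Both names used above have to follow Key Vault's naming rules, and the vault name becomes the first segment of the vault URL. The sketch below checks names locally before running the CLI. The rules it encodes are my reading of Azure's resource naming documentation and should be treated as assumptions to verify: vault names are 3-24 letters, digits and hyphens, start with a letter, end with a letter or digit, and contain no consecutive hyphens; secret names are 1-127 letters, digits and hyphens (no underscores, which is why the secret is called AZURE-AI-SERVICES-KEY). The helper names are hypothetical.

```python
import re

# Assumed vault-name rules: 3-24 chars, letters/digits/hyphens,
# starts with a letter, ends with a letter or digit, no "--".
VAULT_NAME_RE = re.compile(r"[A-Za-z](?!.*--)[A-Za-z0-9-]{1,22}[A-Za-z0-9]")

# Assumed secret-name rules: 1-127 chars, letters/digits/hyphens only.
SECRET_NAME_RE = re.compile(r"[A-Za-z0-9-]{1,127}")


def is_valid_vault_name(name: str) -> bool:
    """Return True if name matches the assumed Key Vault naming rules."""
    return VAULT_NAME_RE.fullmatch(name) is not None


def is_valid_secret_name(name: str) -> bool:
    """Return True if name matches the assumed secret naming rules."""
    return SECRET_NAME_RE.fullmatch(name) is not None


def vault_url(name: str) -> str:
    """Build the vault URL; the vault name is the first DNS label."""
    if not is_valid_vault_name(name):
        raise ValueError(f"invalid key vault name: {name!r}")
    return f"https://{name}.vault.azure.net/"
```

For example, `is_valid_secret_name("AZURE_AI_SERVICES_KEY")` returns `False` under these rules, while the hyphenated name used in the CLI command passes. The same URL pattern appears in the notebook code in the next section.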

Fetch the Key from a Notebook Cell

Now that the key is in AKV, it's available to be fetched from the Jupyter notebook, so let's do that next.

In a notebook cell, enter the following code:

```python
# 1: import the Fabric token library
from trident_token_library_wrapper import PyTridentTokenLibrary as tl

# 2: constants for the vault and the secret to read
key_vault_name = "<unique-keyvault-name>"
key_name = "AZURE-AI-SERVICES-KEY"  # secret name added to the vault

# 3: get a Key Vault access token for the notebook's identity
# (mssparkutils is available in Fabric notebooks without an import)
access_token = mssparkutils.credentials.getToken("keyvault")

# 4: fetch the secret value from the vault
ai_services_key = tl.get_secret_with_token(
    f"https://{key_vault_name}.vault.azure.net/",
    key_name,
    access_token,
)
```

- First we import the `PyTridentTokenLibrary` dependency, which is used to fetch tokens for accessing Azure services. Fabric was code-named "Trident" during development, so this package name may change in the future.
- Next we define constants for the key vault name and the name of the secret we want to read.
- In the third step we fetch a token from Fabric that we can use to access AKV. This token is based on the notebook credential (for an interactive notebook, this is our logged-in credential).
- Finally we fetch the AKV value for the secret named by `key_name`.
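`get_secret_with_token` hides the HTTP details. For comparison, the sketch below builds the equivalent request against the public Key Vault REST API's Get Secret operation: a `GET` on `{vault}/secrets/{name}` with the token as a bearer credential, where the secret comes back in the JSON `value` field. This is not necessarily what `PyTridentTokenLibrary` does internally; the API version `7.4` is an assumption to check against the REST reference, and the helper names are hypothetical. The request is only built, never sent, so the code runs anywhere.

```python
import json
import urllib.request

# Assumed Key Vault REST API version; check the current REST reference.
API_VERSION = "7.4"


def build_get_secret_request(
    vault_url: str, secret_name: str, access_token: str
) -> urllib.request.Request:
    """Build (but do not send) a 'Get Secret' request for the latest version."""
    url = f"{vault_url.rstrip('/')}/secrets/{secret_name}?api-version={API_VERSION}"
    return urllib.request.Request(
        url,
        headers={"Authorization": f"Bearer {access_token}"},
        method="GET",
    )


def secret_value_from_response(body: str) -> str:
    """Extract the secret from a Get Secret JSON response ('value' field)."""
    return json.loads(body)["value"]
```

In a Fabric notebook you could, in principle, pass the `access_token` from step 3 and send the request with `urllib.request.urlopen(req)`; treat that as an untested illustration rather than a replacement for the library call.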

Use the Secret Key to Call the Azure Service

Now that we've stored the service key in the `ai_services_key` Python variable, we can use it!

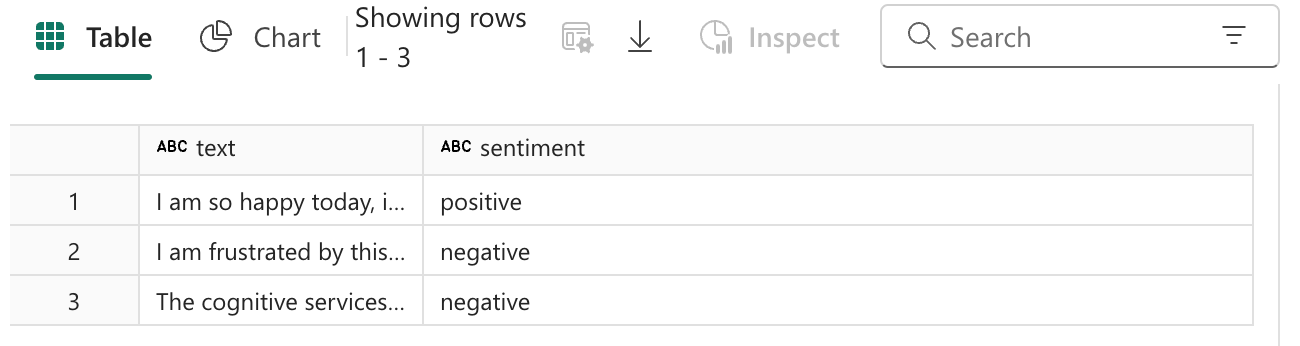

`display(ai_services_key)` does not show the secret value, even though the variable can still be passed to other function calls. This is a security mask applied by Fabric.

For the purposes of demonstration, we'll create an in-memory DataFrame with static data, then call Azure AI's Sentiment Analysis service via the SynapseML wrapper object `TextSentiment` to append a sentiment column to the original DataFrame.

```python
from pyspark.sql.functions import col

# Import path depends on the SynapseML version in your Fabric runtime;
# older releases expose TextSentiment under synapse.ml.cognitive instead.
from synapse.ml.services import TextSentiment

service_loc = "<region>"  # region of your Azure AI services resource

df = spark.createDataFrame(
    [
        ("I am so happy today, its sunny!", "en-US"),
        ("I am frustrated by this rush hour traffic", "en-US"),
        ("The cognitive services on spark aint bad", "en-US"),
    ],
    ["text", "language"],
)

# Run the Text Analytics service with options
sentiment = (
    TextSentiment()
    .setTextCol("text")
    .setLocation(service_loc)
    .setSubscriptionKey(ai_services_key)  # key fetched from AKV above
    .setOutputCol("sentiment")
    .setErrorCol("error")
    .setLanguageCol("language")
)

# Show the results of your text query in a table format
display(
    sentiment.transform(df).select(
        "text", col("sentiment.document.sentiment").alias("sentiment")
    )
)
```

The output of the `display` function shows that a sentiment column has been added to the DataFrame via the Azure AI call.
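`TextSentiment` takes care of the HTTP call, but it helps to see where the secret ends up. The sketch below builds a request against the public Text Analytics v3.1 sentiment endpoint, with the AKV-sourced key sent in the `Ocp-Apim-Subscription-Key` header. SynapseML may use a different endpoint or API version, so treat the URL shape, the hypothetical helper names and the response parsing as assumptions. Nothing is sent over the network.

```python
import json
import urllib.request


def build_sentiment_request(
    region: str, subscription_key: str, texts: list[tuple[str, str]]
) -> urllib.request.Request:
    """Build (but do not send) a sentiment request; texts are (text, language) pairs."""
    # Assumed regional endpoint for the Text Analytics v3.1 REST API.
    url = f"https://{region}.api.cognitive.microsoft.com/text/analytics/v3.1/sentiment"
    documents = [
        {"id": str(i), "language": lang, "text": text}
        for i, (text, lang) in enumerate(texts)
    ]
    return urllib.request.Request(
        url,
        data=json.dumps({"documents": documents}).encode("utf-8"),
        headers={
            # The secret from AKV travels in this header.
            "Ocp-Apim-Subscription-Key": subscription_key,
            "Content-Type": "application/json",
        },
        method="POST",
    )


def sentiments_from_response(body: str) -> list[str]:
    """Pull the document-level sentiment label out of each returned document."""
    return [doc["sentiment"] for doc in json.loads(body)["documents"]]
```

This is also why the key belongs in AKV: anyone who can read it can put it in that header and call the service on your subscription.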

Summary

And that's a wrap! In this post we created an Azure Key Vault, added a secret, then pulled the secret into our Jupyter notebook. This technique will work with any external service that requires secrets, tokens or username/password pairs.