What You’ll Learn

• How almost any kind of data, including images, can be represented as a vector

• How to generate image embeddings using an OpenAI multimodal model

• How to store vectors efficiently using ChromaDB, a high-performance vector database

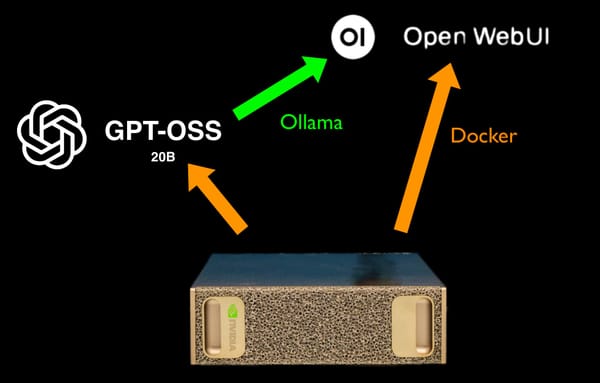

• How LLM microservices (running on vLLM) power the semantic search logic

• A deep dive into the architecture, design decisions, and multi-service setup

• A full demo of the final solution live in the browser

You’ll see exactly how these pieces fit together to deliver an end-to-end multimodal AI experience.
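To make the core idea concrete before the deep dive, here is a minimal sketch of vector similarity search in plain Python. It is not the code from the video: the three-dimensional vectors, the file names, and the `search` helper are all hypothetical stand-ins. In the real solution an embedding model produces much longer vectors, and a vector database such as ChromaDB handles storage and lookup.

```python
import math

def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical toy "embeddings"; real image embeddings have hundreds of dimensions.
image_vectors = {
    "beach.jpg": [0.9, 0.1, 0.0],
    "forest.jpg": [0.1, 0.9, 0.1],
    "city.jpg": [0.0, 0.2, 0.9],
}

def search(query_vector, vectors, top_k=2):
    """Rank stored vectors by similarity to the query and keep the best top_k."""
    scored = [
        (name, cosine_similarity(query_vector, vec))
        for name, vec in vectors.items()
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:top_k]

# A query vector that points mostly in the same direction as "beach.jpg".
results = search([0.8, 0.2, 0.1], image_vectors)
for name, score in results:
    print(f"{name}: {score:.3f}")
```

The closer a stored vector points in the same direction as the query, the higher it ranks, which is the same principle a vector database applies at scale with indexing to avoid comparing against every stored item.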

Source Code

Source code from the video is available on GitHub for review, reuse, and extension:

https://github.com/robkerr/robkerrai-demo-code/tree/main/dgx-spark-image-vectorization