Fabric Table Architecture

One significant benefit to using a Fabric Lakehouse as part of a data analytics architecture is the use of the open Parquet data structures. At the storage level, these tables can be created as easily as importing a CSV file with column headers!

When Microsoft designed Fabric's data storage architecture, they built-in a SQL access path that can be used by applications that support SQL Server drivers, e.g. ODBC and OLEDB. This is a great benefit, as any application that can use a SQL Server connection string – millions of them! – can query and read data in Fabric tables directly.

Connecting to a Fabric Table from External Applications

Microsoft's documentation provides high-level information about the support provided for connecting to a Fabric data warehouse from SQL Management Studio, Azure Data Studio, and 3rd-party applications via ODBC and OLEDB.

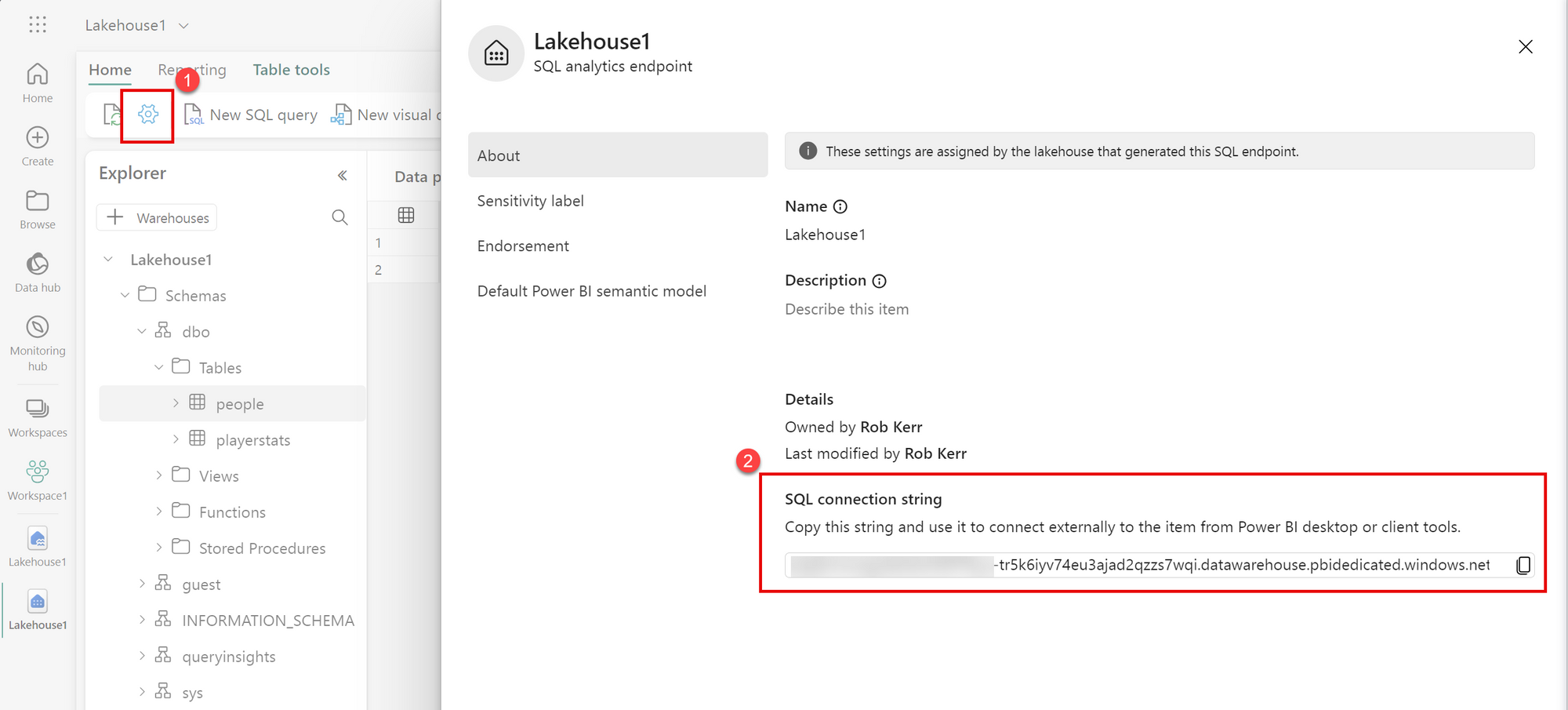

In each case, the elements of a SQL Connection string are needed. This connection string is created when a Fabric Lakehouse is first provisioned, and can be copied from the Fabric UI.

Now that we have the connection string, we need to think about how to authenticate a custom application to Fabric.

Fabric SQL Endpoint Security

Traditionally when connecting to SQL Server using an OLEDB or ODBC connection string, we can authenticate using either a SQL Login (username/password) or using Integrated authentication (e.g. the Windows identity of the currently logged in user).

Fabric SQL Connection Strings support two types of user authentication, both of them tied to Microsoft Entra ID identities.

- Microsoft Entra ID (formerly Azure Active Directory) user principals, or user identities

- Microsoft Entra ID (formerly Azure Active Directory) service principals

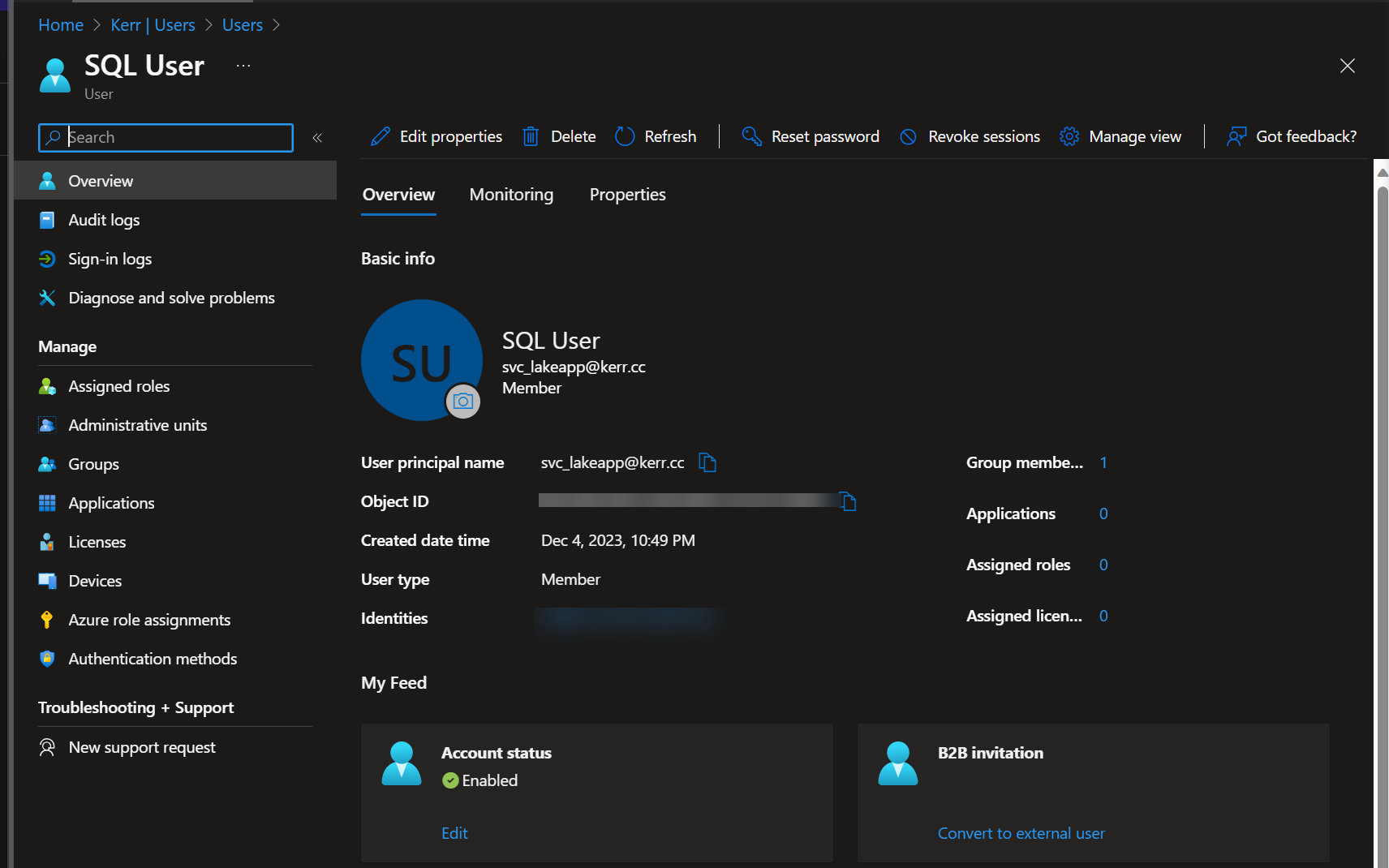

For this post, I'm going to write a simple Windows Console app to query the Fabric table, so to keep things easy I'm going to create a user identity in Entra ID and use that account in the SQL connection string.

Create an Account in Entra ID

For my demo, I created a new user in Entra ID, as follows:

Note that when using a user account like I have, there is a benefit that it can be used outside the Azure environment, but by default Entra ID accounts must use Multifactor Authentication (MFA). For a service account this isn't ideal, so disabling MFA for the user account is likely necessary for production applications.

This can be accomplished in a couple ways:

- Set your tenant to apply MFA per-user, and do not require it for the service account.

- Use Entra ID conditional access rules to not require MFA for service accounts.

Querying Lakehouse Data using SQL

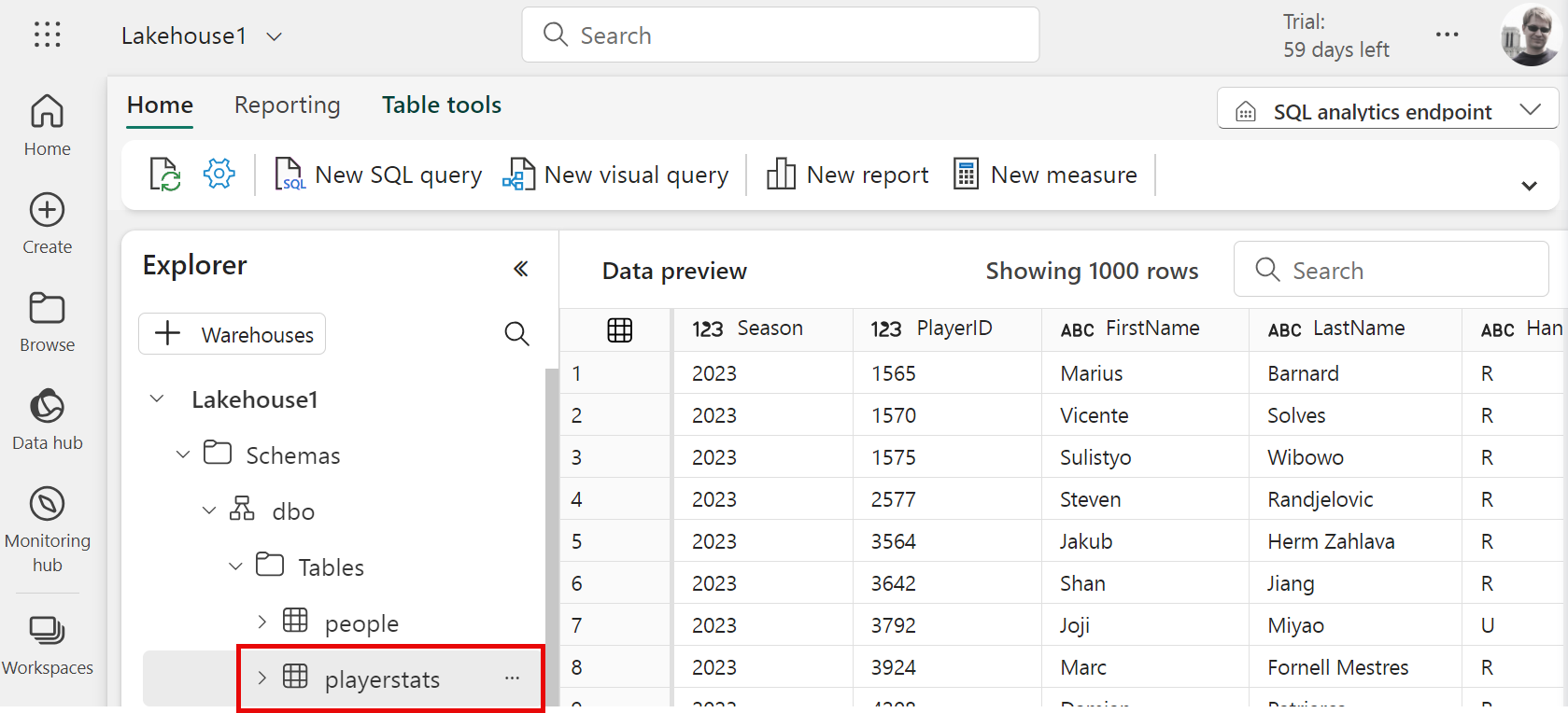

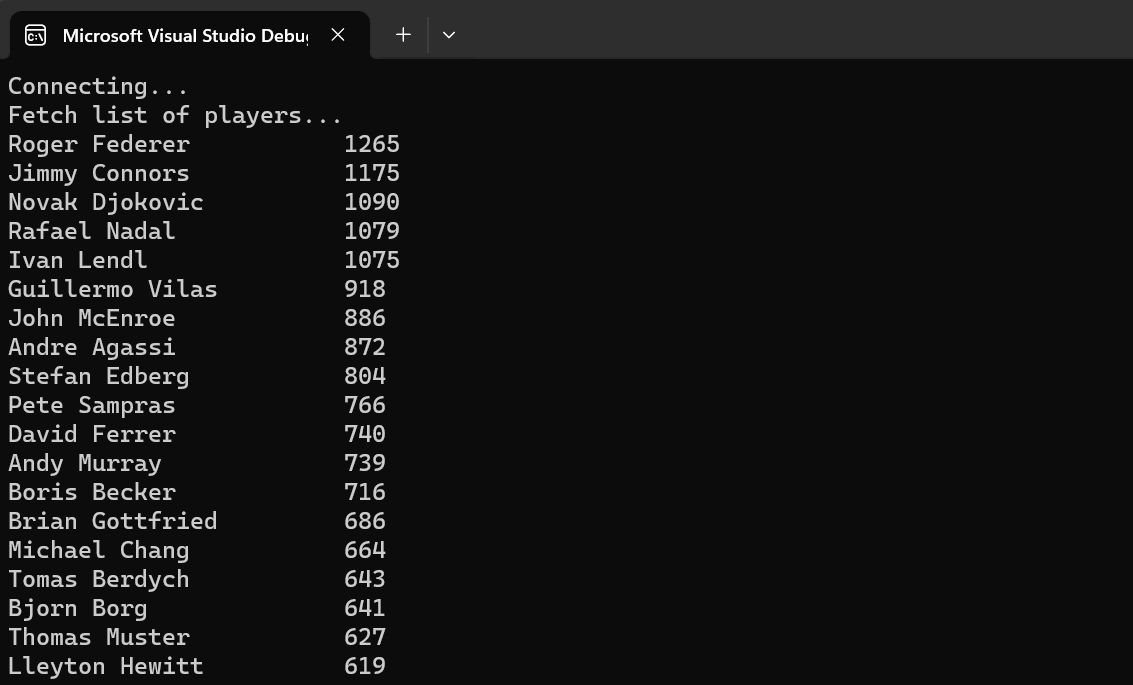

Within Lakehouse1 I have a table I created called playerstats, which contains information on historical stats for professional tennis players.

I'm going to query this table directly from a local C# app. Here's what the source table looks like from the Fabric console:

Query from a C# Application

Now that we have the infrastructure setup, let's use the SQL Connection string and Entra ID login to connect to the Fabric Lakehouse and query data from a table!

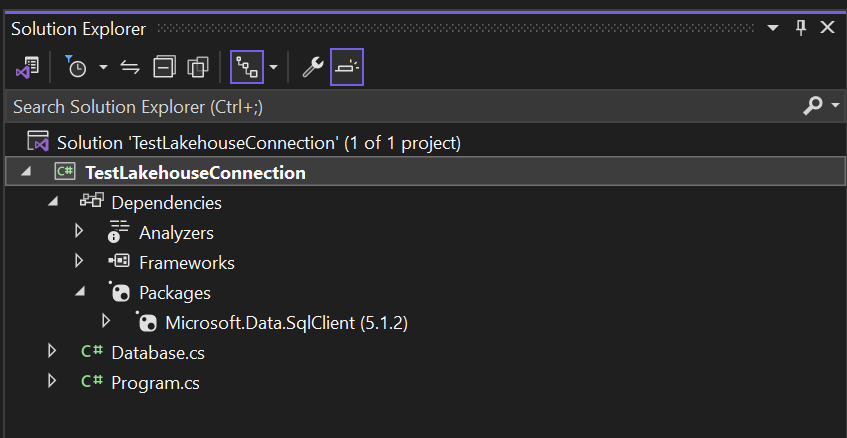

Create a new C# Application

I created a new application in Visual Studio and used NuGet to add the Microsoft.Data.SqlClient dependency to the solution. This is the only dependency we'll need for this solution.

Create a SQL Connection String

To connect to Fabric and query the table, we'll form a SQL Connection string for SqlClient. This is the connection string we need:

Data Source=*****.datawarehouse.pbidedicated.windows.net,1433;

Initial Catalog=Lakehouse1;

Authentication=ActiveDirectoryPassword;

User Id=svc_lakeapp@kerr.cc;

Password=********;The connection string is the same that I copied from the Fabric Lakehouse settings (see above). I've masked the first part of the connection string and Entra ID password, so be sure to use the real values from your own environment in these fields.

Connect to the Lakehouse Using SqlClient

Within the C# application, we can then connect to the Lakehouse in a manner the same as usual!

SqlConnection conn = new SqlConnection(connectionString);

conn.Open();Easy!

Query the Lakehouse Table

Again, the process of querying the Lakehouse table is the same as any other SQL query. In this case, I'll query the FirstName and LastName columns from a Lakehouse table called playerstats. Of course, I can use typical SQL syntax to filter and sort results, which the Lakehouse compute engine will process for us.

SqlCommand command = new SqlCommand(@"SELECT LastName, FirstName, Wins

FROM playerstats

WHERE Wins > 0

ORDER BY Wins DESC", conn);

using (SqlDataReader reader = command.ExecuteReader())

{

while (reader.Read())

{

Console.WriteLine($"{reader["FirstName"]}

{reader["LastName"]}\t\t{reader["Wins"]}");

}

}And that's it! Here's the first few rows of the query results:

Limitations

Querying Lakehouse tables from custom applications makes the Lakehouse more versatile and opens a wide range of integration possibilities. There are a couple limitations to be aware of when envisioning solutions:

Entra ID Required

Lakehouse supports the Entra ID principals listed above, and doesn't have support for the legacy SQL ID (username/password).

However, since ActiveDirectoryPassword is supported in the connection string, we can still connect external, non-Azure hosted applications. However, coordinating an appropriate service account is an extra complexity.

Read-only

The Lakehouse SQL connection string at this writing is read-only, so it cannot be used to update or delete data in Lakehouse tables. Doing so will result in an error.

For analytical, reporting and extract use cases, this presents no problem at all, but if the scenario is to maintain master data in the Lakehouse (for example), another solution such as Data Mirroring or traditional ETL/ELT jobs should be used.

Get the Code

Try it yourself by downloading the code!