This video covers:

• What fine-tuning is, how it compares to RAG (Retrieval-Augmented Generation), and when to use each

• Coding a PyTorch fine-tuning script for small models

• Formatting custom training data for LLMs

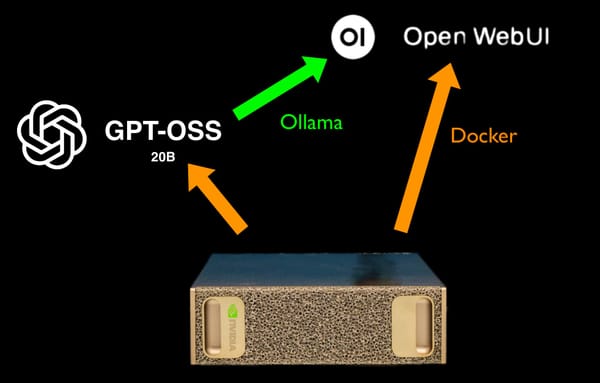

• Running fine-tuning jobs in a Docker container

• Merging fine-tuned adapters into a single model

• Quantizing the model to run efficiently on edge or local devices

• Testing your fine-tuned model on a desktop with LM Studio
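As a taste of the data-formatting step, here is a minimal sketch of turning question/answer pairs into chat-style JSONL, a common layout for LLM fine-tuning data. The `messages` schema, the helper names `to_chat_record` and `to_jsonl`, the system prompt, and the sample pair are all assumptions for illustration; the repo's scripts may use a different format.

```python
import json

def to_chat_record(question, answer, system_prompt="You are a helpful assistant."):
    """Wrap one Q/A pair in a chat-style 'messages' record (assumed schema)."""
    return {
        "messages": [
            {"role": "system", "content": system_prompt},
            {"role": "user", "content": question},
            {"role": "assistant", "content": answer},
        ]
    }

def to_jsonl(pairs):
    """Serialize (question, answer) pairs as JSON Lines: one JSON object per line."""
    return "\n".join(
        json.dumps(to_chat_record(q, a), ensure_ascii=False) for q, a in pairs
    )

# Hypothetical sample data, not taken from the video's dataset.
pairs = [
    ("What is fine-tuning?",
     "Continuing to train a pretrained model on task-specific data."),
]
jsonl_text = to_jsonl(pairs)
print(jsonl_text)
```

Writing `jsonl_text` to a `.jsonl` file gives one training example per line, which most fine-tuning data loaders can stream without reading the whole file into memory.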

If you’re a developer or AI engineer who wants to get hands-on with customizing small language models, this deep dive is for you.

Link to GitHub repo with scripts and data used in the video:

Link to NVIDIA DGX Spark Training with PyTorch Playbook: