When a machine learning model has been validated and predicts outcomes effectively, the next step is to put it to use in production applications. An excellent way to integrate AI and ML models with other applications is to publish a predictive model as a REST web service endpoint that accepts JSON inputs and returns predictions as JSON objects.

What is Azure Machine Learning?

Azure Machine Learning is a managed, enterprise-grade machine learning platform that enables data scientists to collaborate on creating machine learning models.

Azure ML includes the following features:

- A collaborative workbench environment

- A data repository to manage large data sets, including version management

- Web-based Jupyter notebook editing and versioning

- On-demand compute clusters for model training

- REST endpoint deployment of registered models

- MLflow support

- A robust set of APIs with support for Python, R, Java, Julia and C#

- Connectivity to a variety of data sources, including Azure Blob Storage, AWS S3, Azure Data Lake, Databricks and Snowflake

- Access to a curated model catalog with models from OpenAI, Hugging Face, and more

Like other large cloud vendors, Microsoft is investing heavily in an enterprise platform that lets businesses add AI and ML workloads to their cloud deployments. Microsoft has built a comprehensive offering and is a leader in the Gartner Magic Quadrant for AI Development Services.

What is Model Endpoint Deployment?

When we've invested many hours of Data Scientist and compute time into creating a model that has the potential for huge business impact, we need to get that model out of the lab and into production.

One way to publish a model so that other applications can use it is to wrap it in a RESTful web service endpoint, which Azure Machine Learning supports out of the box.

While REST isn't the only type of web service (GraphQL has been growing in popularity), it is by far the most common way for client applications to consume back-end services. Exposing a model through a REST API therefore makes it immediately familiar and accessible to the developers of virtually any application.

How do I deploy Azure ML Models to REST Endpoints?

For a detailed walk-through, see the video demonstrating the entire deployment process. If you'd rather read the highlights, they follow below.

Training a Model

Before publishing an endpoint, we have to train a model that will respond to application requests.

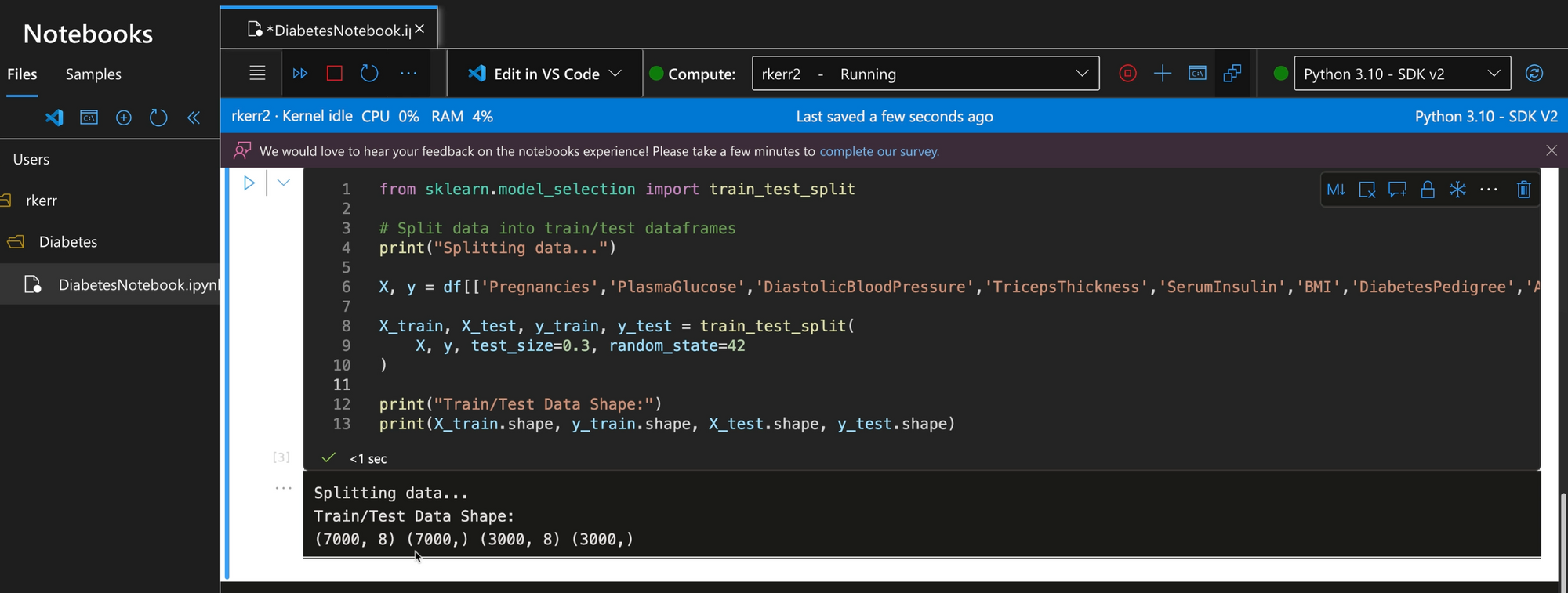

Models in Azure ML can be built with Jupyter notebooks and Python, or with a visual designer. Most full-time data scientists prefer Jupyter notebooks.

In the image below, a Python notebook trains a prediction model using the open-source scikit-learn library.

Model training can be very CPU- or GPU-intensive, depending on the algorithms used and the data volume. With Azure ML, it's most common to use Azure-provisioned compute clusters, although local compute (e.g., your laptop) is also supported.
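A minimal sketch of the kind of notebook cell described above, assuming scikit-learn is installed. Because the article's training data isn't included, `make_classification` generates a synthetic stand-in with eight numeric features and a binary label, mirroring the eight-input diabetes model tested later; the `max_depth` value and the 80/20 split are arbitrary choices, not the author's settings.

```python
from sklearn.datasets import make_classification
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.tree import DecisionTreeClassifier

# Synthetic stand-in for a diabetes dataset: 8 numeric features, binary label.
# (Assumption: the article's real training data is not shown.)
X, y = make_classification(
    n_samples=768, n_features=8, n_informative=5, n_classes=2, random_state=42
)

# Hold out 20% of the rows to validate the model before registering it.
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.2, random_state=42
)

# A depth-limited tree is less prone to overfitting than an unbounded one.
model = DecisionTreeClassifier(max_depth=4, random_state=42)
model.fit(X_train, y_train)

# Validate on the held-out rows: each prediction is 0 (low risk) or 1 (high risk).
predictions = model.predict(X_test)
accuracy = accuracy_score(y_test, predictions)
print(f"Validation accuracy: {accuracy:.3f}")
```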

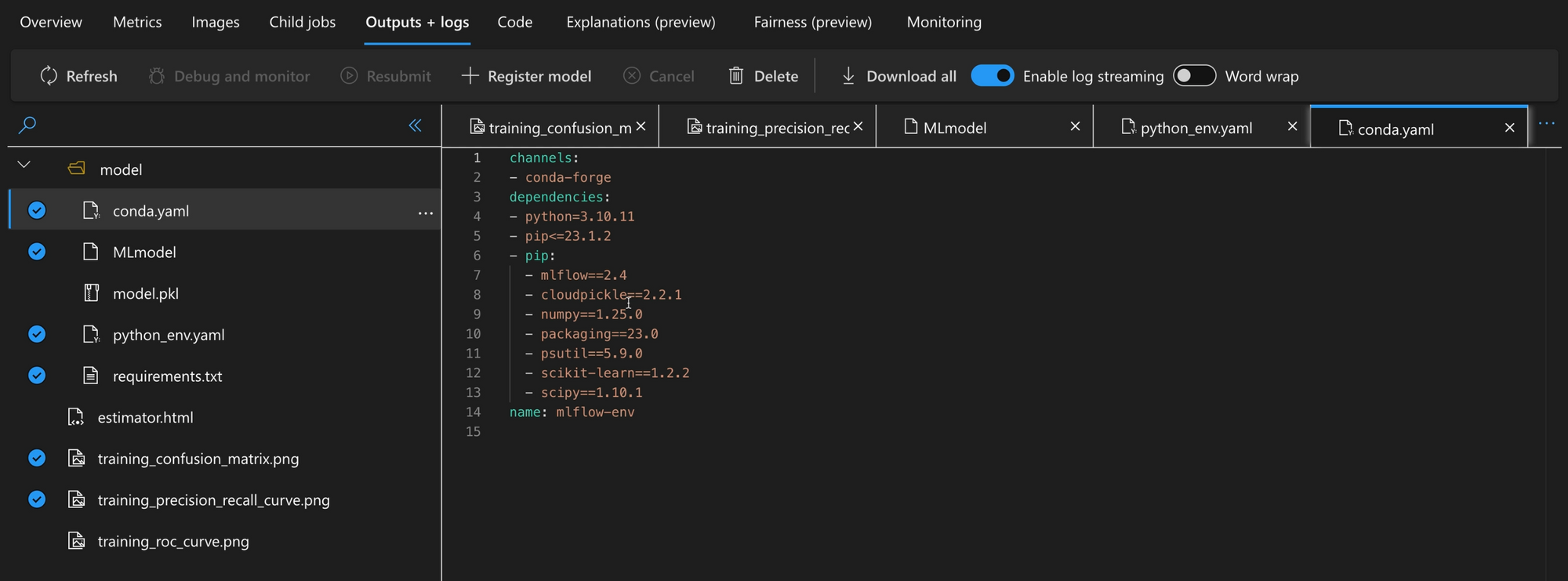

Registering a Model

Once a model has been trained and validated to make accurate predictions, the next step is to register it with Azure ML. Registration uploads the files needed to deploy the model to the Azure ML workspace.

Once a model is registered, it can be published through an Azure ML managed endpoint.
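Registration can also be done from code. A minimal sketch using the Azure ML Python SDK v2 (`azure-ai-ml` with `azure-identity`), assuming the trained model was saved to a local folder in MLflow format (for example with `mlflow.sklearn.save_model`). The subscription, resource group, workspace, folder, and model names are placeholders; the SDK imports sit inside the functions only so the sketch can be loaded without the SDK installed.

```python
def get_ml_client(subscription_id: str, resource_group: str, workspace_name: str):
    """Connect to an Azure ML workspace using the azure-ai-ml SDK (v2)."""
    # Imported inside the function so this sketch loads without the SDK installed.
    from azure.ai.ml import MLClient
    from azure.identity import DefaultAzureCredential

    return MLClient(DefaultAzureCredential(), subscription_id, resource_group, workspace_name)


def register_model(ml_client, model_dir: str, name: str = "diabetes-decision-tree"):
    """Upload a local MLflow model folder and register it as a model asset."""
    from azure.ai.ml.constants import AssetTypes
    from azure.ai.ml.entities import Model

    model = Model(
        path=model_dir,                # local folder containing the MLflow model files
        type=AssetTypes.MLFLOW_MODEL,  # lets managed endpoints deploy it without a scoring script
        name=name,                     # hypothetical model name
        description="Decision tree classifier predicting diabetes risk",
    )
    # Uploads the files to the workspace and returns the registered Model entity.
    return ml_client.models.create_or_update(model)


# Usage (placeholders, not real values):
# ml_client = get_ml_client("<subscription-id>", "<resource-group>", "<workspace>")
# registered = register_model(ml_client, "./diabetes_model")
# print(registered.name, registered.version)
```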

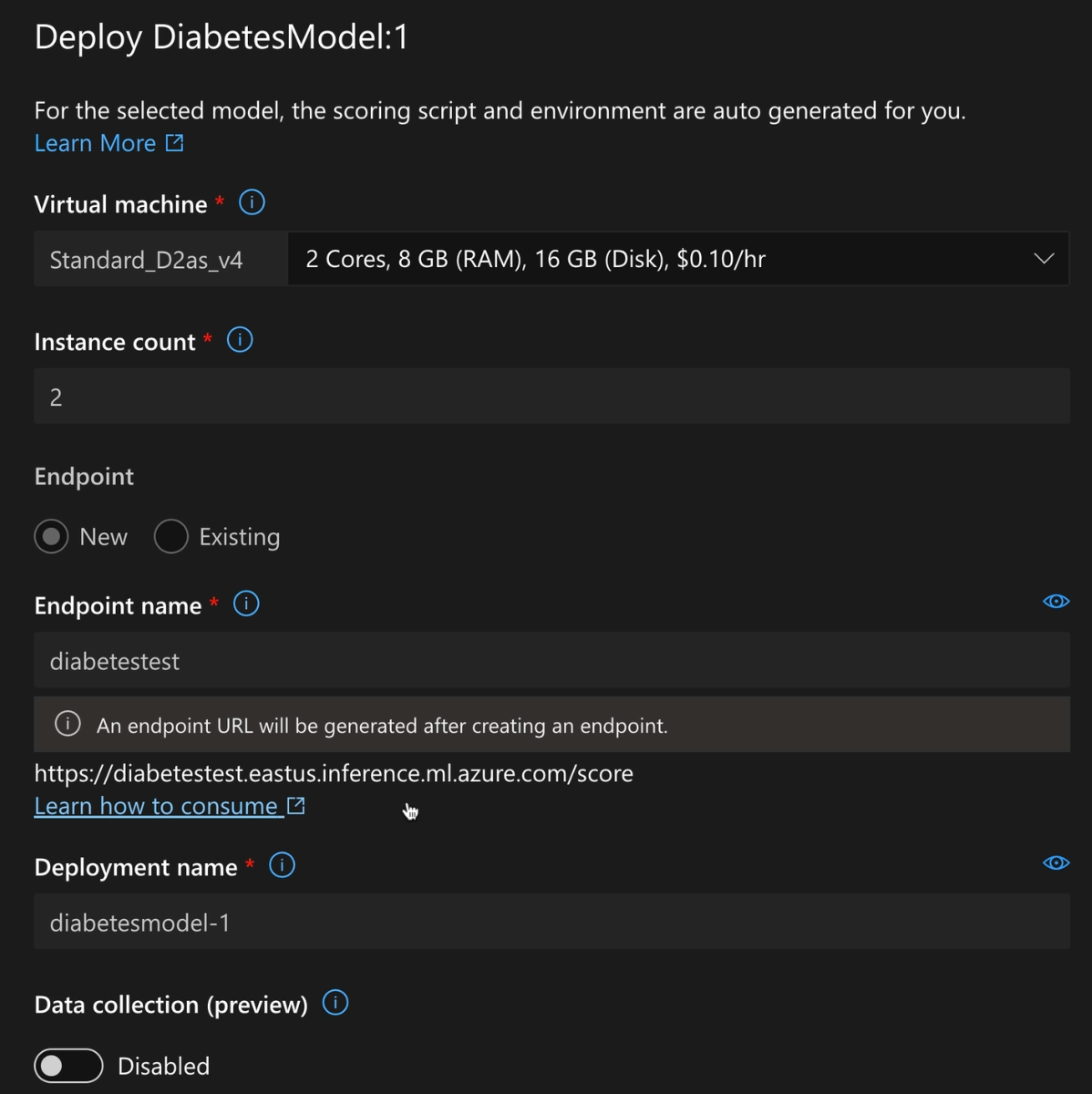

Deploying an Endpoint

A registered model can be deployed either from Azure Machine Learning studio (below) or from a CLI shell script. Scripting enables an MLOps approach in which retrained and re-validated models are deployed to endpoints automatically.

While models can be deployed in many ways (they can even be exported to other cloud providers), Azure ML managed endpoints let you manage the entire process within one environment.

Under the covers, Azure ML managed endpoints are Azure compute nodes configured in scalable clusters.

Once the model is deployed, it's available via standard RESTful POST calls from any authorized application.
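The Azure ML Python SDK exposes the same deployment operations as the CLI, which is what makes scripted MLOps pipelines possible. A minimal sketch using `azure-ai-ml` (v2), assuming `ml_client` is an authenticated `MLClient` and `model` is a registered MLflow-format model (such as the entity returned by `ml_client.models.create_or_update`). The endpoint name, the deployment name `blue`, and the VM size are placeholders; endpoint names must be unique within an Azure region.

```python
def deploy_to_managed_endpoint(
    ml_client,
    model,
    endpoint_name: str = "diabetes-endpoint",
    deployment_name: str = "blue",
):
    """Create a managed online endpoint, deploy a registered model, and route traffic to it."""
    # Imported inside the function so this sketch loads without the SDK installed.
    from azure.ai.ml.entities import ManagedOnlineDeployment, ManagedOnlineEndpoint

    # 1. The endpoint: a stable scoring URL with key-based authentication.
    endpoint = ManagedOnlineEndpoint(name=endpoint_name, auth_mode="key")
    ml_client.online_endpoints.begin_create_or_update(endpoint).result()

    # 2. The deployment: the model running on Azure-managed compute instances.
    deployment = ManagedOnlineDeployment(
        name=deployment_name,
        endpoint_name=endpoint_name,
        model=model,                      # a registered MLflow-format model
        instance_type="Standard_DS3_v2",  # assumed VM size; pick one your quota allows
        instance_count=1,
    )
    ml_client.online_deployments.begin_create_or_update(deployment).result()

    # 3. Send all traffic to the new deployment.
    endpoint.traffic = {deployment_name: 100}
    ml_client.online_endpoints.begin_create_or_update(endpoint).result()

    # Return what a client application needs: the scoring URL and an auth key.
    scoring_uri = ml_client.online_endpoints.get(name=endpoint_name).scoring_uri
    key = ml_client.online_endpoints.get_keys(name=endpoint_name).primary_key
    return scoring_uri, key
```

Splitting the endpoint from its deployments is what allows a retrained model to be rolled out as a second deployment and shifted traffic gradually.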

Testing the Endpoint

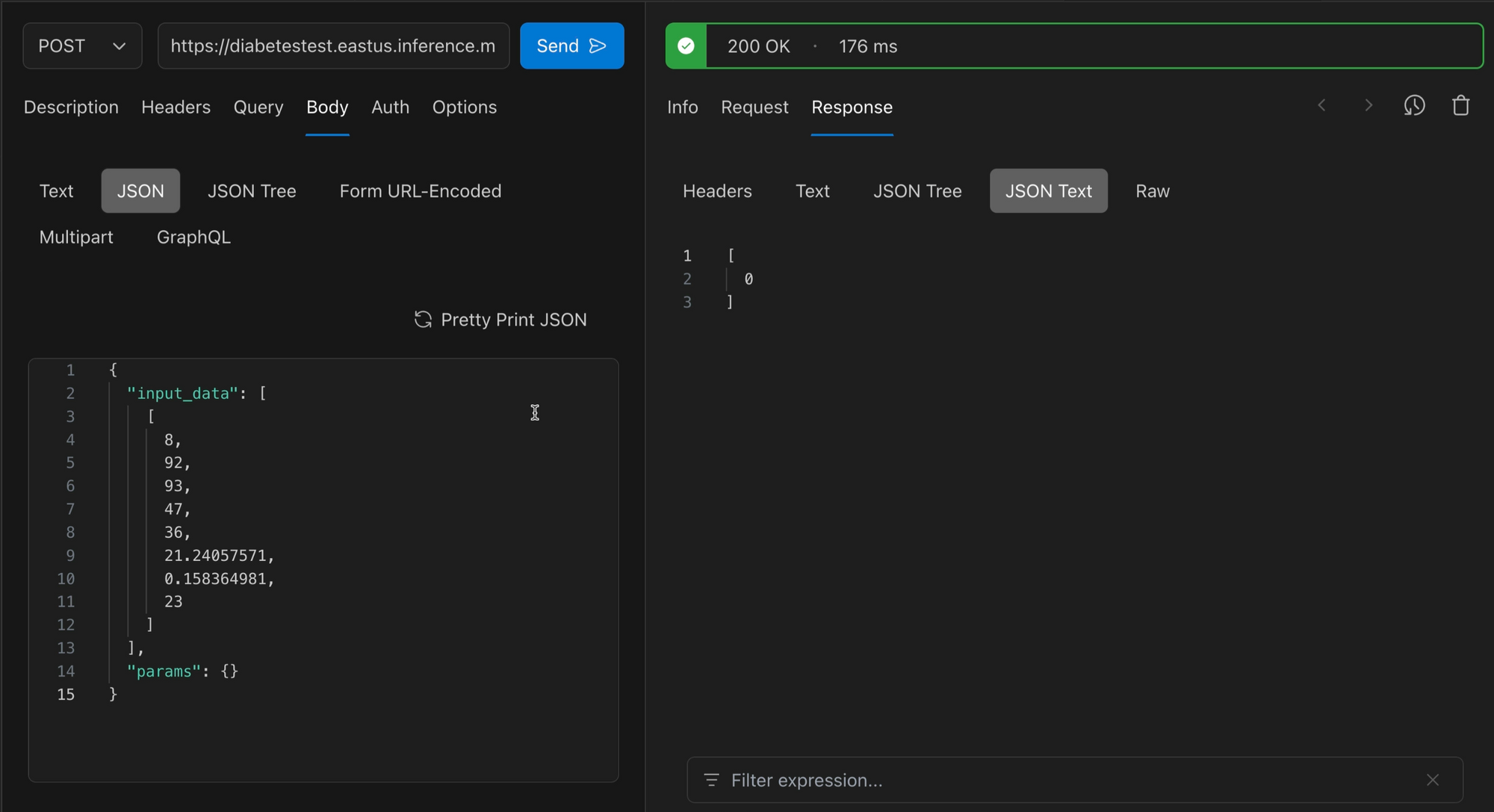

After deployment is complete, an Azure ML model can be called like any other RESTful endpoint. For example, using the RapidAPI testing tool, we can call a model's managed endpoint as shown in the image above.

In this test call of a diabetes prediction model, the input_data payload supplies eight input parameters to a decision tree model. The response returns 0 if the likelihood of diabetes is low and 1 if it is high.
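Any HTTP client can make the same call. A minimal sketch using only Python's standard library, assuming an MLflow-format model deployment (which expects an `input_data` object with `columns`, `index`, and `data`) and key-based authentication. The column names are an assumption borrowed from the public Pima Indians diabetes dataset, which has eight inputs; substitute the columns your model was trained on.

```python
import json
import urllib.request

# Assumed feature names (the eight columns of the public Pima Indians diabetes
# dataset); replace them with the columns your model was trained on.
COLUMNS = [
    "Pregnancies", "Glucose", "BloodPressure", "SkinThickness",
    "Insulin", "BMI", "DiabetesPedigreeFunction", "Age",
]


def build_scoring_request(scoring_uri: str, api_key: str, rows: list[list[float]]) -> urllib.request.Request:
    """Build a POST request in the input_data format used by MLflow model deployments."""
    payload = {
        "input_data": {
            "columns": COLUMNS,
            "index": list(range(len(rows))),
            "data": rows,
        }
    }
    return urllib.request.Request(
        scoring_uri,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",  # endpoint key from the studio or SDK
        },
        method="POST",
    )


def score(scoring_uri: str, api_key: str, rows: list[list[float]]):
    """Send the request and decode the JSON response (a list of 0/1 predictions)."""
    request = build_scoring_request(scoring_uri, api_key, rows)
    with urllib.request.urlopen(request, timeout=30) as response:
        return json.loads(response.read())


# Usage (placeholder URI and key; the row holds illustrative values):
# uri = "https://<endpoint-name>.<region>.inference.ml.azure.com/score"
# print(score(uri, "<endpoint-key>", [[6, 148, 72, 35, 0, 33.6, 0.627, 50]]))
```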

Summary

Artificial Intelligence and Machine Learning have become more accessible and cost-efficient to implement and integrate with business applications. Many factors have contributed to accelerating adoption of AI in line-of-business and high-tech applications:

- Lower costs for compute

- Availability of elastic, scalable compute clusters

- Low-cost storage

- Availability of enterprise-grade SaaS data science tooling

As machine learning models become more ubiquitous, there are more opportunities to incorporate predictive analytics into line-of-business applications. Streamlined deployment environments like Azure Machine Learning make that integration easier than ever.